Alibaba’s latest addition to the Qwen3 family, the Qwen3‑235B‑A22B‑Instruct‑2507 model, has made waves in the open-source AI world. Released under the Apache‑2.0 license, this openly available model offers strong reasoning, language, and coding performance, and positions Alibaba as a major rival to the makers of GPT‑4o, Claude Opus 4, DeepSeek‑V3, and Kimi K2.

The Architecture: MoE, Long Context & Dedicated Instruct Mode

- Mixture-of-Experts (MoE): The model contains 235 billion parameters in total, but each token is routed to only 8 of the 128 experts in every MoE layer, so roughly 22B parameters are active per token. This delivers high capacity at lower runtime cost.

- Native 256K context window: Accepts up to 262,144 tokens in a single input, which is especially useful for summarization, document analysis, and long-form interaction.

- Non‑Thinking Mode only: Unlike the earlier hybrid Qwen3 models, this version is purely instruction-tuned and does not emit <think> blocks. Deployment is simpler and faster because there is no thinking mode to switch on or off.
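To make the MoE bullet concrete, here is a minimal sketch of top-k expert routing for a single token. It is an illustration, not Qwen's actual router: the random gate logits, the `route_token` helper, and the choice to renormalize weights over the selected experts are all assumptions. Only the 128-expert and 8-active figures come from the text.

```python
import math
import random

NUM_EXPERTS = 128  # experts per MoE layer (figure from the text)
TOP_K = 8          # experts activated per token (figure from the text)

def route_token(gate_logits, top_k=TOP_K):
    """Pick the top_k experts for one token and return {expert_index: weight}.

    Assumption: weights are a softmax over the selected logits only,
    so they sum to 1. Real routers may normalize differently.
    """
    # Indices of the top_k largest gate logits.
    chosen = sorted(range(len(gate_logits)),
                    key=lambda i: gate_logits[i], reverse=True)[:top_k]
    # Numerically stable softmax restricted to the chosen experts.
    peak = max(gate_logits[i] for i in chosen)
    exps = {i: math.exp(gate_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

rng = random.Random(0)  # hypothetical gate scores for one token
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
weights = route_token(logits)
print(len(weights), "experts selected out of", NUM_EXPERTS)

# Share of all parameters that is active per token, from the headline figures.
print(f"active share of parameters: {22 / 235:.1%}")  # 9.4%
```

The active share (about 9.4%) is larger than 8/128 (6.25%), presumably because parameters outside the experts, such as attention and embeddings, are used for every token.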


What’s New in the Instruct‑2507 Update?

Alibaba released this version on July 21, 2025, in response to community feedback, splitting reasoning and instruct use cases into separate models. The core improvements:

- Better instruction following and closer alignment with user preferences, especially on open‑ended tasks.

- Enhanced domain knowledge and multilingual coverage across niche topics in 119 languages and dialects.

- Performance gains in math, science, logic reasoning, programming, and tool usage.

Alibaba also released an FP8-quantized version, which significantly reduces the memory footprint with minimal performance loss and suits memory-constrained deployments.
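As a rough back-of-the-envelope check on the FP8 saving, the sketch below estimates weight storage only. It assumes a 16-bit (BF16) baseline and ignores the KV cache, activations, and any quantization scale factors, so real deployments need more memory than these figures. The `weight_memory_gb` helper is hypothetical.

```python
TOTAL_PARAMS = 235e9  # total parameter count from the text

def weight_memory_gb(num_params, bits_per_param):
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    return num_params * bits_per_param / 8 / 1e9

# Assumed precisions: BF16 as the unquantized baseline, FP8 as the quantized release.
for name, bits in [("BF16", 16), ("FP8", 8)]:
    print(f"{name}: ~{weight_memory_gb(TOTAL_PARAMS, bits):.0f} GB of weights")
# BF16: ~470 GB of weights
# FP8: ~235 GB of weights
```

Under these assumptions FP8 halves the weight footprint, which is where most of the memory goes for a model of this size.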

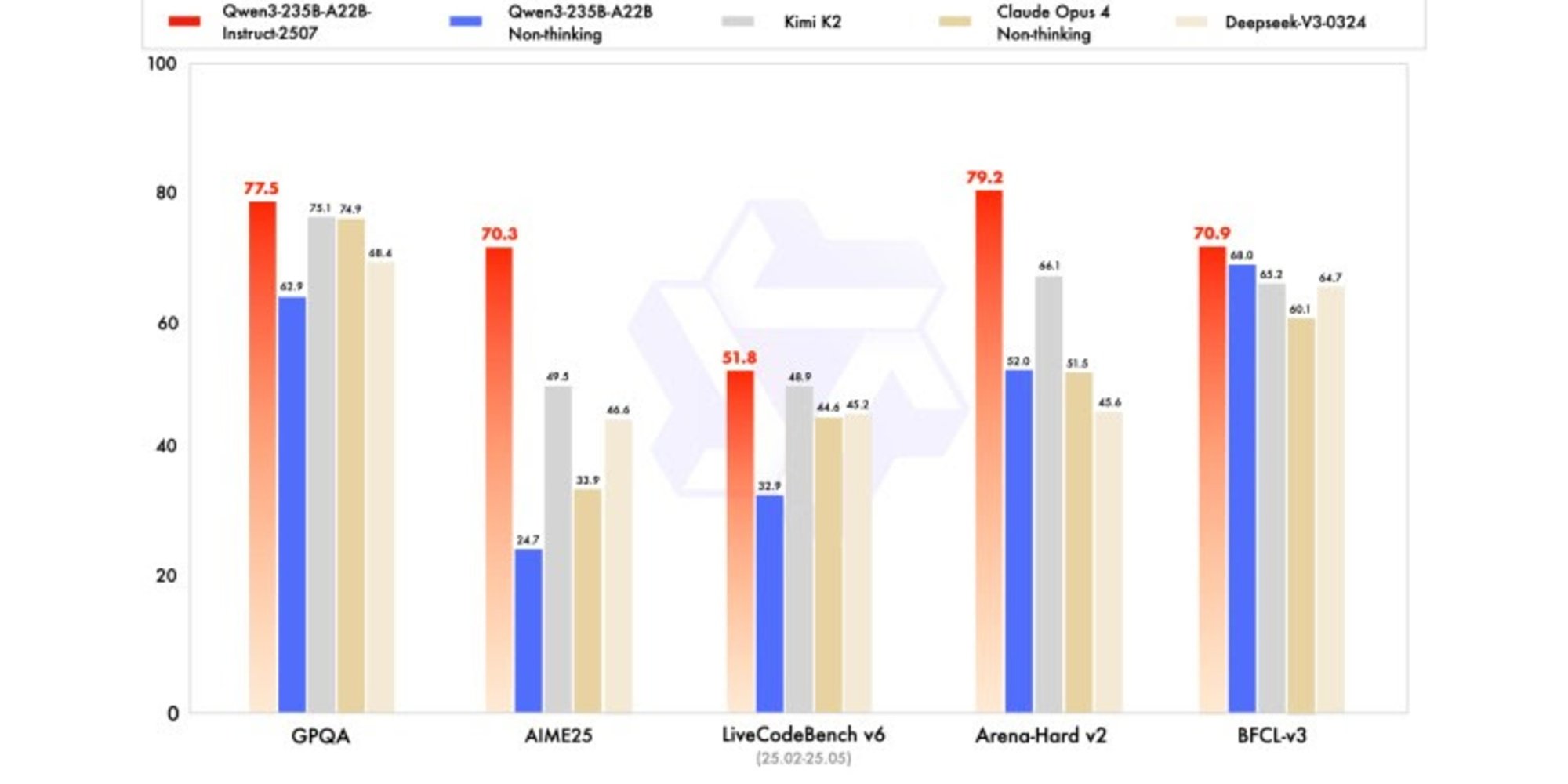

Benchmark Performance vs. Top Competitors

Qwen3‑235B‑A22B‑Instruct‑2507 delivers outstanding results, often best-in-class, in both public benchmarks and internal evaluations:

| Benchmark | Qwen3‑2507 | GPT‑4o‑0327 | Claude Opus 4 | DeepSeek‑V3 | Kimi K2 |
| --- | --- | --- | --- | --- | --- |
| MMLU‑Pro | 83.0 | 79.8 | 86.6 | 81.2 | 75.2 |
| MMLU‑Redux | 93.1 | 91.3 | 94.2 | 90.4 | 89.2 |
| GPQA (advanced QA) | 77.5 | 66.9 | 74.9 | 68.4 | 62.9 |
| CSimpleQA | 84.3 | 60.2 | 68.0 | 71.1 | 60.8 |
| AIME25 (Math) | 70.3 | 26.7 | 33.9 | 46.6 | 24.7 |
| ZebraLogic (Logic) | 95.0 | 52.6 | n/a | 83.4 | 89.0 |
| LiveCodeBench v6 | 51.8 | 35.8 | 44.6 | 45.2 | 48.9 |
| MultiPL‑E (Coding) | 87.9 | 82.7 | 88.5 | 82.2 | 79.3 |
| Arena‑Hard v2 (Align) | 79.2 | 61.9 | 51.5 | 45.6 | 66.1 |
| WritingBench | 85.2 | 75.5 | 79.2 | 74.5 | 77.0 |

Qwen3‑2507 especially stands out on math (AIME25) and logic (ZebraLogic), where it leads every listed model by a wide margin. On coding it tops LiveCodeBench v6 and comes within a point of Claude Opus 4 on MultiPL‑E.
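To see exactly where the model leads and where it trails, the short script below recomputes its margin over the best competing score on each benchmark. The numbers are copied from the table above; the `margin_over_best_rival` helper is a hypothetical name used only for this illustration.

```python
# Scores from the table above, in the order:
# Qwen3-2507, GPT-4o-0327, Claude Opus 4, DeepSeek-V3, Kimi K2 (None = not reported).
SCORES = {
    "MMLU-Pro":         (83.0, 79.8, 86.6, 81.2, 75.2),
    "MMLU-Redux":       (93.1, 91.3, 94.2, 90.4, 89.2),
    "GPQA":             (77.5, 66.9, 74.9, 68.4, 62.9),
    "CSimpleQA":        (84.3, 60.2, 68.0, 71.1, 60.8),
    "AIME25":           (70.3, 26.7, 33.9, 46.6, 24.7),
    "ZebraLogic":       (95.0, 52.6, None, 83.4, 89.0),
    "LiveCodeBench v6": (51.8, 35.8, 44.6, 45.2, 48.9),
    "MultiPL-E":        (87.9, 82.7, 88.5, 82.2, 79.3),
    "Arena-Hard v2":    (79.2, 61.9, 51.5, 45.6, 66.1),
    "WritingBench":     (85.2, 75.5, 79.2, 74.5, 77.0),
}

def margin_over_best_rival(row):
    """Qwen3-2507 score minus the best reported competitor score, in points."""
    qwen, *rivals = row
    best = max(r for r in rivals if r is not None)
    return round(qwen - best, 1)

margins = {name: margin_over_best_rival(row) for name, row in SCORES.items()}
for name, margin in margins.items():
    print(f"{name:<18} {margin:+.1f}")

leads = [name for name, m in margins.items() if m > 0]
print(f"leads on {len(leads)} of {len(margins)} benchmarks")
```

A positive margin means Qwen3‑2507 has the top score in that row; a negative margin shows how far it sits behind the leader.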

Community Feedback: Praise & Caveats

What Developers Love

- Code capabilities: Many report Qwen3‑2507 performs on par with premium models like Claude Sonnet / Opus for programming tasks.

- Local performance: On modern hardware (Apple M‑series machines with unified memory, or a PC with around 80 GB of RAM plus an 8 GB GPU), users report more than 6 tokens/sec with optimized quantized versions.

- Cost efficiency: It supports self‑hosting under Apache 2.0, enabling powerful inference without high API fees.

Reported Limitations

- Benchmark vs. real‑world gap: Some users note slight underperformance in long-term or contextually complex tasks compared to benchmark scores.

- No chain-of-thought: Without thinking mode, Qwen3‑2507 may falter on tasks requiring deep multi‑step reasoning, where reasoning models like AM‑Thinking‑v1 or Qwen3‑Thinking‑2507 excel.

- Knowledge cutoff: Like all static LLMs, its training data is dated—no live browsing or retrieval.

Getting Started: Using Qwen3‑2507 Yourself

Available now on Hugging Face, OpenRouter, and ModelScope, Qwen3‑235B‑A22B‑Instruct‑2507 is easy to access and deploy.

Deployment Tips

- Use transformers ≥ 4.51 or a serving framework such as vLLM, or run GGUF-quantized builds with Ollama, LM Studio, or llama.cpp.

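- Sampling best practice: temperature=0.7, top_p=0.8, top_k=20, min_p=0, the values Qwen's model card suggests for this non-thinking model (temperature=0.6 with top_p=0.95 is the suggestion for the Thinking variant).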

- Output length: allow up to 16k output tokens or more, especially for long-context tasks.
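Because the prompt and the generated reply share the same context window, reserving output tokens reduces how much input fits. The sketch below works through that budget. It assumes "256K" means 256 × 1024 tokens and "16k" means 16 × 1024 tokens; the helper names are hypothetical, and token counts must come from the model's own tokenizer.

```python
CONTEXT_WINDOW = 256 * 1024     # 262,144 tokens: the native "256K" window
MAX_OUTPUT_TOKENS = 16 * 1024   # 16,384 tokens reserved for the reply (assumed meaning of "16k")

def max_prompt_tokens(context_window=CONTEXT_WINDOW, max_output=MAX_OUTPUT_TOKENS):
    """Largest prompt that still leaves room for max_output generated tokens."""
    if max_output >= context_window:
        raise ValueError("output reservation must be smaller than the context window")
    return context_window - max_output

def fits(prompt_tokens, context_window=CONTEXT_WINDOW, max_output=MAX_OUTPUT_TOKENS):
    """True if a prompt of prompt_tokens leaves the full output reservation free."""
    return prompt_tokens <= max_prompt_tokens(context_window, max_output)

print(max_prompt_tokens())  # 245760
print(fits(200_000))        # True
print(fits(250_000))        # False
```

If a document exceeds the prompt budget, either shrink the output reservation or split the input before sending it.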

Prompting Suggestions

For consistent instruction compliance:

- Use clear formatting: “Please follow instructions precisely. Output the final answer in one paragraph.”

- For math or multi-step tasks: “Solve step by step and then give the final result only.”
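One way to apply these suggestions consistently is to attach the formatting rule to every request in code. The sketch below builds messages in the common role/content chat format accepted by OpenAI-compatible servers. The `build_messages` helper, the sample task, and the choice to put the general rule in a system message are assumptions for illustration.

```python
import json

def build_messages(task, format_rule,
                   system_rule="Please follow instructions precisely."):
    """Return chat messages with the format rule appended to the user task."""
    return [
        {"role": "system", "content": system_rule},
        {"role": "user", "content": f"{task.strip()}\n\n{format_rule.strip()}"},
    ]

messages = build_messages(
    task="What is 17 * 24?",  # hypothetical example task
    format_rule="Solve step by step and then give the final result only.",
)
print(json.dumps(messages, indent=2))
```

The resulting list can be passed as the `messages` field of a chat completion request to whichever endpoint serves the model.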

Use Cases in Practice

- Knowledge-intensive tasks: Summaries of long policies, legal reasoning, or research papers.

- Coding: Generate or review code, perform debugging, or automate task scripting.

- Multilingual translation and QA across 119 languages and dialects.

Why Qwen3‑2507 Represents AI Progress

- Open-source scale: Provides capabilities previously limited to giant proprietary models.

- Separation of capabilities: The Instruct model is cleanly decoupled from the Thinking variant, simplifying deployment.

- Accessibility: Deployable on-premise with permissive licensing—ideal for privacy-conscious and cost-sensitive users.

- Long-context applications: The native 256K window opens possibilities in document analysis, book summarization, and agentic workflows.

- Benchmark strength: Leading or near-leading scores across reasoning, coding, multilingual, and alignment tasks among the models compared above.

Final Thoughts & Future Outlook

The launch of Qwen3‑235B‑A22B‑Instruct‑2507 is a milestone: it proves that open-source models can achieve state-of-the-art performance while remaining accessible, fast, and commercially usable. Combined with the separate Qwen3‑Thinking variant and other specialized Qwen3 models (e.g. Qwen3‑Coder, Qwen3‑MT), Alibaba is building a full-stack, open framework for AI innovation.

Expect continued rapid evolution: future releases may include agentic capabilities, RL‑fine-tuned variants, further quantization, and richer deployment tooling. Qwen3 is paving the way for a new competitive landscape in AI—one where openness, performance, and accessibility go hand in hand.

FAQs

What does Qwen3‑235B‑A22B‑Instruct‑2507 specialize in?

It’s a non-thinking instruction‑tuned MoE model optimized for rapid, accurate responses in logic, math, code, general knowledge, and multilingual tasks.

How big is its context window?

It supports up to 262,144 tokens (256K) natively.

How does it compare to GPT‑4o or Claude?

On the benchmarks above, it outperforms GPT‑4o and DeepSeek‑V3 in math (AIME25), GPQA, logic (ZebraLogic), and coding. Against Claude Opus 4 it leads on most of them and trails narrowly on MMLU‑Pro, MMLU‑Redux, and MultiPL‑E.

Is it free and open source?

Yes—all weights and code are under Apache‑2.0, allowing free commercial and private use.

Can I run it locally?

Yes. It runs on frameworks like llama.cpp, LM Studio, and vLLM, and quantized builds in formats like Q4_K_XL reduce the memory required.