On July 28, 2025, Alibaba’s AI research arm unveiled Wan 2.2, a new open-source video generation model poised to challenge closed, high-cost competitors. With its introduction of a Mixture-of-Experts (MoE) architecture, flexible control over cinematic aesthetics, and compatibility with consumer GPUs, Wan 2.2 aims to make professional video generation accessible to developers, creators, and researchers worldwide.

In this article, you’ll learn:

- What Wan 2.2 is and how it differs from earlier models

- Its underlying architecture and innovations

- Available variants and their trade-offs

- How to set it up locally or via UI tools

- How it compares to closed models like Sora and KLING 2.0

- Use cases, challenges, and what the future may hold

What Is Alibaba Wan 2.2?

Wan 2.2 is Alibaba’s next-generation open video foundation model, developed under the Tongyi/Wan AI initiative. It is fully released under an Apache 2.0 license, meaning it is open for commercial and research use alike.

Unlike many video AI models locked behind APIs or proprietary stacks, Wan 2.2 exposes its code, weights, and architecture to the public. Its key improvements over Wan 2.1 include:

- MoE architecture: introducing mixture-of-experts for efficiency and capacity scaling

- Cinematic control: aesthetic metadata over lighting, composition, color, and motion

- Better motion and generalization: trained with significantly expanded data compared to Wan 2.1

- Hybrid, efficient models: a variant that supports both text-to-video and image-to-video with lighter compute profiles

Wan 2.2 supports text-to-video (T2V), image-to-video (I2V), and hybrid inputs, allowing creators to generate short video snippets (e.g. 5–10 seconds) from prompts or still images.

In the words of a technical summary, the adoption of MoE “sorts noisy from less noisy inputs” to inject expert specialization into a diffusion pipeline, boosting realism and motion control.

Architecture & Key Innovations

Mixture-of-Experts (MoE)

One of the most significant advances in Wan 2.2 is the integration of a Mixture-of-Experts architecture into the video diffusion domain. In broad terms:

- Rather than a single large dense model, Wan 2.2 employs multiple expert subnetworks, each specialized for portions of the denoising process (e.g. rough structure vs fine detail).

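- Routing between experts is tied to the denoising step: according to the project's documentation, the switch follows the noise level (signal-to-noise ratio) rather than a learned per-token gate, so only a subset of total parameters is active per pass. This helps preserve quality while keeping computational cost manageable.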

- The model’s published repository states: “introduces a Mixture-of-Experts (MoE) architecture… enlarges overall model capacity while maintaining the same computational cost.”

- This is one of the first open-source video models to leverage MoE—a technique previously more common in large language models.

By splitting into “high-noise” and “low-noise” expert stages, Wan 2.2 dedicates one expert to coarse structure early in denoising and another to refining details later, so total capacity grows while only one expert's parameters are active at any given step.
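The split by noise level can be sketched in a few lines. This is a minimal illustration, assuming the router is a simple threshold on a normalized noise level; `BOUNDARY`, `select_expert`, and `plan_schedule` are hypothetical names and values, not the repository's API.

```python
# Illustrative sketch only: a threshold router between two denoising experts.
# BOUNDARY is a hypothetical value, not taken from the Wan 2.2 release.
BOUNDARY = 0.5


def select_expert(noise_level: float, boundary: float = BOUNDARY) -> str:
    """Pick an expert for one denoising step.

    noise_level is normalized to [0, 1]: 1.0 is pure noise, 0.0 is a clean sample.
    """
    if not 0.0 <= noise_level <= 1.0:
        raise ValueError("noise_level must be in [0, 1]")
    return "high_noise" if noise_level >= boundary else "low_noise"


def plan_schedule(num_steps: int, boundary: float = BOUNDARY) -> list[str]:
    """Assign an expert to each step of a linear noise schedule from 1.0 down to 0.0."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    levels = [1.0 - i / (num_steps - 1) for i in range(num_steps)]
    return [select_expert(level, boundary) for level in levels]


if __name__ == "__main__":
    for step, expert in enumerate(plan_schedule(10)):
        print(f"step {step}: {expert}")
```

Only one expert is selected per step, which is why the active parameter count stays below the total even though both experts exist in the checkpoint.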

Enhanced Aesthetic Controls & Data Labeling

Wan 2.2 goes beyond mere generation by incorporating rich aesthetic metadata:

- The training data is annotated with dozens of cinematic attributes: lighting style, color tone, composition rules, contrast gradients, etc.

- This gives users parameter-level control: you can specify “soft rim lighting,” “warm color grade,” or “slow camera dolly in” directives.

- The use of such metadata is intended to let creators “direct the AI like a cinematographer” rather than passively accept generated output.
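One way to work with these directives is to assemble them into the text prompt. The sketch below assumes the directives are passed as plain prompt text, since the article does not specify a dedicated parameter interface; `build_prompt` is a hypothetical helper, not part of Wan 2.2.

```python
def build_prompt(subject: str, *, lighting: str = "", color: str = "", camera: str = "") -> str:
    """Join a subject with optional cinematic directives into one text prompt."""
    # Drop empty directives so the prompt has no dangling separators.
    directives = [d.strip() for d in (lighting, color, camera) if d.strip()]
    return ", ".join([subject.strip(), *directives])


if __name__ == "__main__":
    print(build_prompt(
        "a lighthouse on a cliff at dusk",
        lighting="soft rim lighting",
        color="warm color grade",
        camera="slow camera dolly in",
    ))
```

Keeping each directive in its own argument makes it easy to vary one attribute, such as lighting, while holding the rest of the shot constant.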

Efficient Hybrid Model Variant

Wan 2.2 includes a hybrid “TI2V-5B” mode, which supports both text and image inputs. Key features:

- It supports 720p at 24 fps outputs using a high-compression VAE with a latent compression ratio of 16×16×4 (16× along each spatial axis and 4× in time).

- It is designed to run on a single mainstream GPU (e.g. NVIDIA RTX 4090), though generating a clip takes minutes rather than running in real time.

- It aims to offer a balance between quality and accessibility, making cinematic video generation feasible on consumer hardware.

Because of this hybrid efficiency, many creators will find the TI2V variant the most practical entry point.
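To make the 16×16×4 ratio concrete, the sketch below computes the latent grid for a short 720p clip. It assumes the first frame is encoded alone and each following group of four frames shares one latent frame, a convention common to causal video VAEs; the article does not confirm this detail, and `latent_shape` is a hypothetical helper.

```python
def latent_shape(frames: int, height: int, width: int,
                 spatial: int = 16, temporal: int = 4) -> tuple[int, int, int]:
    """Return (latent_frames, latent_height, latent_width) for a 16x16x4 VAE.

    Assumption: the first frame is encoded alone and every following group of
    `temporal` frames shares one latent frame, as in many causal video VAEs.
    """
    if frames < 1 or height % spatial or width % spatial:
        raise ValueError("frames must be >= 1 and height/width divisible by the spatial factor")
    latent_frames = 1 + (frames - 1) // temporal
    return latent_frames, height // spatial, width // spatial


if __name__ == "__main__":
    frames = 5 * 24 + 1  # a 5-second clip at 24 fps, plus the leading frame
    print(latent_shape(frames, 720, 1280))  # (31, 45, 80)
```

Under these assumptions the diffusion model works on a 31×45×80 grid instead of 121 full-resolution frames, which is where most of the compute savings come from.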

Model Variants & Trade-offs

Alibaba offers multiple flavors of Wan 2.2 to suit different use cases and hardware:

| Model Variant | Parameter Scale (Active / Total) | Input Modality | Target Resolution / FPS | Suitable Use Case |
| --- | --- | --- | --- | --- |
| T2V-A14B | 14B active / 27B total | Text → Video | Up to 720p | For creators needing maximum control and fidelity from text |
| I2V-A14B | 14B active / 27B total | Image → Video | Up to 720p | Turning still images into animated sequences |
| TI2V-5B | ~5B (hybrid model) | Text / Image → Video | 720p @ 24 fps | For consumer hardware with flexibility between input modes |

The 14B versions deliver finer details but require greater compute. The 5B hybrid mode is more accessible and will likely see the broadest adoption for creators who want local inference.
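The trade-offs in the table can be encoded as a small lookup. The sketch below mirrors the table's rows and picks a variant from the available inputs; the selection rule and the names `Variant` and `pick_variant` are illustrative assumptions, not an official recommendation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Variant:
    name: str
    active_params_b: float
    total_params_b: Optional[float]  # None where the table gives no separate total
    accepts_text: bool
    accepts_image: bool


# Rows copied from the table above.
VARIANTS = {
    v.name: v
    for v in (
        Variant("T2V-A14B", 14, 27, accepts_text=True, accepts_image=False),
        Variant("I2V-A14B", 14, 27, accepts_text=False, accepts_image=True),
        Variant("TI2V-5B", 5, None, accepts_text=True, accepts_image=True),
    )
}


def pick_variant(has_text: bool, has_image: bool, prefer_small: bool = False) -> Variant:
    """Choose a variant from the inputs at hand (hypothetical selection rule)."""
    if not (has_text or has_image):
        raise ValueError("need a text prompt, an image, or both")
    # The hybrid model covers mixed input and is the lightest option.
    if prefer_small or (has_text and has_image):
        return VARIANTS["TI2V-5B"]
    return VARIANTS["I2V-A14B" if has_image else "T2V-A14B"]


if __name__ == "__main__":
    print(pick_variant(has_text=True, has_image=False).name)
```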

One of the GitHub metadata pages describes the active parameter scheme and runtime design for both dense and MoE modes.

Getting Started: Installation & UI Tools

Local Setup (Command-Line)

To run Wan 2.2 locally, you can follow these general steps (adapted from its repository and community guides):

1. Clone the repository

git clone https://github.com/Wan-Video/Wan2.2.git

cd Wan2.2

2. Install dependencies

Install the Python libraries the repository requires (a compatible PyTorch version, attention modules, and the diffusion toolkit).

3. Download model weights

Use Hugging Face or the provided download scripts to fetch the desired model variant (e.g. TI2V-5B or T2V-A14B).

4. Run inference scripts

Use the bundled scripts or APIs to generate video from text or image prompts.

Detailed instructions and model cards are provided in the GitHub repo.
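The first three steps can be collected into a small script that prints the commands without running them. This is a sketch under stated assumptions: the Hugging Face repository IDs in `HF_REPOS` and the presence of a `requirements.txt` file are assumptions to verify against the repository, and the inference step is left out because script names and flags should be taken from the README.

```python
import shlex

REPO_URL = "https://github.com/Wan-Video/Wan2.2.git"

# Assumed Hugging Face repository IDs; verify them against the model cards.
HF_REPOS = {
    "TI2V-5B": "Wan-AI/Wan2.2-TI2V-5B",
    "T2V-A14B": "Wan-AI/Wan2.2-T2V-A14B",
    "I2V-A14B": "Wan-AI/Wan2.2-I2V-A14B",
}


def setup_commands(variant: str) -> list[list[str]]:
    """Return the commands for clone, install, and download, without running them."""
    repo_id = HF_REPOS[variant]  # raises KeyError for an unknown variant
    ckpt_dir = f"./Wan2.2-{variant}"
    return [
        ["git", "clone", REPO_URL],
        # Assumes the repository ships a requirements.txt file.
        ["pip", "install", "-r", "Wan2.2/requirements.txt"],
        ["huggingface-cli", "download", repo_id, "--local-dir", ckpt_dir],
    ]


if __name__ == "__main__":
    # Print the commands for review; run them manually once they look right.
    for cmd in setup_commands("TI2V-5B"):
        print(shlex.join(cmd))
```

Printing rather than executing keeps the script safe to run anywhere and makes it easy to check each command against the repository's instructions first.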

ComfyUI Integration

Wan 2.2 ships with day-zero support for ComfyUI, a node-based visual interface for diffusion models:

- Prebuilt workflows are available for each variant (T2V, I2V, hybrid) under the “Video” templates

- Drag-and-drop nodes for model loader, prompt input, VAE decoder, scheduler, and video output

- It lowers the barrier to entry for creators who prefer GUIs over scripting

ComfyUI support makes it easier to experiment and iterate without deep engineering work.
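Conceptually, a node-based workflow is a dependency graph that runs each node once its inputs are ready. The sketch below models that idea with Python's standard `graphlib`; the node names mirror the list above but are hypothetical, and this is not ComfyUI's actual workflow format or API.

```python
from graphlib import TopologicalSorter

# Hypothetical node names; each node maps to the set of nodes whose output
# it consumes. This is not ComfyUI's workflow format.
WORKFLOW = {
    "model_loader": set(),
    "prompt_input": set(),
    "scheduler": {"model_loader", "prompt_input"},
    "vae_decoder": {"scheduler"},
    "video_output": {"vae_decoder"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return one valid order in which the nodes can run (dependencies first)."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for position, node in enumerate(execution_order(WORKFLOW), start=1):
        print(f"{position}. {node}")
```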

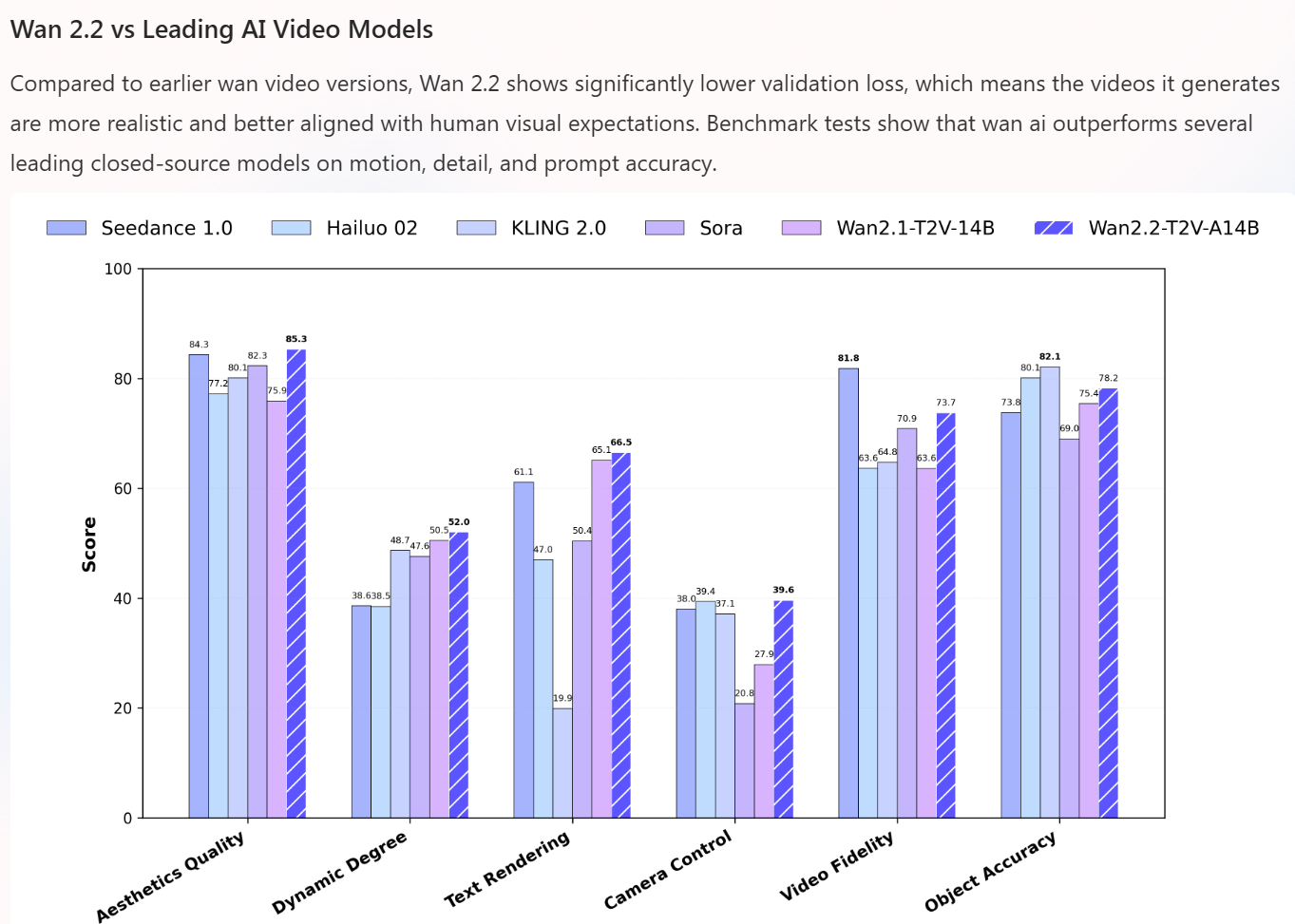

Wan 2.2 vs. Sora, KLING 2.0 & Other Video Models

How does Wan 2.2 stack up in practice against comparable closed models?

Key Advantages

Open source with full transparency

Because it is Apache-licensed and publicly released with architecture and weights, it avoids vendor lock-in.

Efficiency via MoE

Wan 2.2 can maintain or exceed quality with fewer active parameters per inference, which mitigates compute costs.

Fine artistic control

The addition of rich cinematic metadata allows users to guide composition, lighting, motion, and color more granularly than many black-box systems.

Accessibility to consumer hardware

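The 5B hybrid model can run on a single consumer GPU such as the RTX 4090 (which has 24 GB of VRAM) in 720p mode, and setups with around 8 GB of VRAM reportedly work when weights are offloaded to system memory, making it feasible for creators without industrial clusters.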

Limitations & Trade-offs vs Closed Models

- Closed models may support higher resolution outputs (1080p, 4K) or longer video clips beyond a few seconds, where Wan 2.2 may lag.

- Proprietary models might have better optimization in cloud infrastructure, yielding faster inference and scaling.

- Some closed models may offer more mature support for integrated video-to-video editing, style transfer, or domain-specific transformations.

However, Wan 2.2’s performance is competitive. In community reviews, participants note it outperforms rivals in motion fidelity and aesthetics while being much more accessible.

In one comparative article, Wan 2.2 is positioned as “the world’s first open-source video generation model using MoE architecture” and is argued to deliver better motion control than closed models.

A community user on Reddit remarked:

“I just tried out Wan 2.2 Animate, and the results are so convincing it’s hard to believe they’re AI-generated.”

Use Cases & Potential Applications

Wan 2.2 opens a wide array of possibilities:

- Short promotional clips, intros, ad creatives — generate cinematic motion for marketing content without a film crew

- Concept prototyping — visualize narratives or storyboards with motion from static concept art

- Social media content — animate still photography or illustrations into short looping video

- Educational & explainer visuals — use hybrid or image input to animate diagrams, maps, or illustrations

- Game design & concept art — create animated texture suggestions, cutscene prototypes, or stylized motion samples

- Artistic experiments — style transfer, aesthetic experiments, or progressive content generation

Because it is open source, Wan 2.2 can be embedded in pipelines, mobile apps, or local tools without paying cloud fees, making it especially attractive to startups, universities, and individual creators.

Challenges & Considerations

While promising, Wan 2.2 faces several challenges:

- Motion consistency & temporal coherence: ensuring smooth frame-to-frame continuity, avoiding flicker or drift, remains challenging in many models.

- Hardware ceilings: the 14B variants may require high-end GPUs (≥16 GB VRAM), which many users don’t possess.

- Training resource costs: for organizations wanting to fine-tune or extend the model, training large MoE systems can still be expensive.

- Longer video lengths: the model is currently best suited to short clips (a few seconds); generating tens of seconds or minutes may require stitching or hybrid methods.

- Ethical & misuse concerns: as with all generative video models, there is risk of misuse (deepfakes, misinformation). Open models must be paired with responsible governance.

- Optimization & deployment tooling: community effort is needed to build efficient runtime libraries, quantization, streaming decoding, etc.
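VRAM requirements depend heavily on numeric precision and on whether weights are offloaded, so a rough estimate of weight memory is a useful sanity check. The sketch below covers the weights only and ignores activations, the text encoder, and the VAE; `weight_memory_gb` is a hypothetical helper and the dtype table is a simplification.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(params_billion: float, dtype: str = "fp16") -> float:
    """Approximate memory for the weights alone, in decimal gigabytes.

    One billion parameters at one byte each is one gigabyte, so the estimate
    is simply parameters (in billions) times bytes per parameter.
    """
    if params_billion <= 0:
        raise ValueError("params_billion must be positive")
    return float(params_billion * BYTES_PER_PARAM[dtype])


if __name__ == "__main__":
    for label, size in (("TI2V-5B", 5), ("A14B active", 14), ("A14B total", 27)):
        print(f"{label}: ~{weight_memory_gb(size):.0f} GB at fp16")
```

Estimates like these explain why quantization and offloading matter so much for local inference: halving the bytes per parameter halves the weight footprint.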

Future Outlook & Roadmap

The release of Wan 2.2 is just the beginning. Potential trajectories include:

- Higher resolutions (1080p, 4K) and longer duration support

- Domain-specialized variants, such as animation, architecture, medical imaging

- Better video editing (video-to-video), interpolation, re-rendering capabilities

- Integration with large language models, e.g. video + narration generation

- Community extensions, LoRA-style fine-tuning, and plugin architectures

- Efficient runtime frameworks (e.g. quantized MoE inference, lower latency)

- Governance tools and watermarking to ensure traceability

Because Wan 2.2 is fully open and Apache-licensed, the community can directly contribute advances, optimizations, and integrations. Many believe this model may accelerate innovation more than closed monoliths.

Conclusion

Wan 2.2 is a watershed in the AI video generation landscape—a model that combines open access, technical innovation, and creator control. Its MoE architecture, cinematic metadata, and hybrid efficiency lower the barrier to high-end video synthesis.

For creators, researchers, and startups, Wan 2.2 offers a chance to build cinematic content without huge budgets or reliance on closed platforms. While challenges around motion consistency, scaling, and responsible use remain, the full open release makes those hurdles surmountable.

As the model and community evolve, Wan 2.2 could well redefine expectations for what AI video tools can do—and who can use them.


FAQs

What input types does Wan 2.2 support?

It supports text-to-video (T2V), image-to-video (I2V), and hybrid modes (text + image) for flexible creation.

Is Wan 2.2 truly open source?

Yes. The model is released under the Apache 2.0 license, and its repository includes weights, architecture, and code.

What hardware is needed?

- For TI2V-5B hybrid: a consumer GPU such as the RTX 4090 (24 GB VRAM) can handle 720p/24fps outputs; figures as low as ~8 GB VRAM assume memory offloading.

- For 14B MoE versions: higher VRAM (≥16 GB) and powerful GPU setups are recommended.

- CPU, RAM, and storage also matter, especially for pre- and post-processing.

How does Wan 2.2 compare with Sora or KLING 2.0?

While Sora and KLING may offer higher resolution or longer clips in their closed systems, community reviews argue that Wan 2.2 is competitive or better in motion control and aesthetics. Its clearest advantage is accessibility, because it can run locally and be customized.

Can I try Wan 2.2 online without local setup?

Yes. Some AI platforms (e.g., Media.io) have integrated Wan 2.2 video generation in browser tools for creators to experiment without hardware.