Resemble AI has launched Chatterbox Multilingual—a production-grade, open-source Text-to-Speech (TTS) engine capable of zero-shot voice cloning in 23 languages, complete with expressive controls and integrated watermarking. Distributed under a permissive MIT license, this TTS model significantly lowers the barrier for multilingual speech synthesis while advancing ethics and performance.

What Is Chatterbox Multilingual?

Chatterbox Multilingual builds on the original Chatterbox framework, introducing zero-shot voice cloning across 23 languages—all freely available under the MIT license—making it easily integrable and modifiable by developers, researchers, and hobbyists alike.

Highlights:

- Zero-shot voice cloning: Generate speech that mimics a target speaker with only a short audio snippet—no retraining needed.

- Supports languages including Arabic, Hindi, Chinese, Swahili, and many more.

- Includes emotion and intensity controls, enabling expressive delivery styles.

- Built-in PerTh watermarking (Perceptual Threshold watermark) ensures that all output audio is traceable and verifiable.

Core Features

1. Zero-Shot Voice Cloning

Chatterbox Multilingual employs a zero-shot learning framework. Developers can simply provide a short audio sample—potentially just a few seconds—to generate speech in the speaker’s voice across any of the supported languages, dramatically reducing the need for extensive voice datasets.

2. Multilingual Capability

With support for 23 languages—ranging from widely spoken to lesser-represented languages like Swahili, Danish, Finnish, Hebrew, Malay, Turkish, and more—this model offers broad global applicability.

3. Expressive Controls: Emotion & Intensity

Chatterbox Multilingual introduces emotion categories (e.g., happy, sad, angry) and an exaggeration parameter to define emotional intensity. This enables speech generation that captures nuanced tone—vital for gaming, dialogue agents, storytelling, and accessibility.

4. Responsible AI via Watermarking

Every audio output includes PerTh watermarking—an imperceptible neural watermark that enables traceability. The watermark survives typical audio manipulations like compression or editing and supports content verification via open-source detectors.

5. Competitive Quality

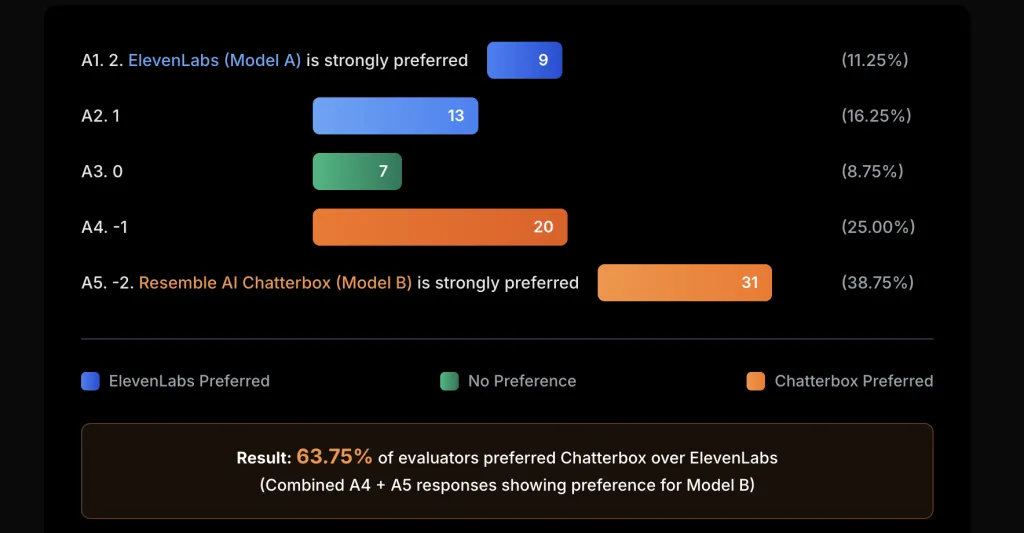

In blind A/B evaluations via Podonos, 63.75% of listeners preferred Chatterbox over ElevenLabs, indicating it delivers highly natural and appealing speech.

Image Source: Resemble AI Chatterbox

Architecture & Performance Insights

The model is built atop a 0.5B-parameter Llama backbone, trained on 500K hours of clean audio, achieving strong stability and alignment-informed generation.

Inference performance:

- Zero-shot generation latency is approximately 200 ms on strong hardware, making it suitable for real-time applications.

Model usability:

- Designed for accessibility via pip install chatterbox-tts, with Python 3.11 compatibility and example scripts for multilingual generation, Gradio interface, and voice conversion.

Expressive Control in Depth

Chatterbox Multilingual allows fine-grained emotion control:

- Emotion categories: choose “happy,” “sad,” “neutral,” etc.

- Exaggeration parameter: adjusts intensity.

- cfg_weight: adjusts voice conformity; e.g., for dramatic effect use higher exaggeration with lower cfg_weight, while the default (0.5 each) works well for balanced outputs.

Ethical Design: Watermark for Trust

The PerTh watermark is embedded into every audio file in a psychoacoustically imperceptible way. It persists across MP3 compression and audio edits and can be extracted with provided detectors—enhancing content provenance and mitigating misuse in an era of synthetic media.

Deployment Options

Open-Source Version

- Free and MIT-licensed: install locally under permissive terms.

- Ideal for experimentation, academic use, indie developers.

Chatterbox Multilingual Pro

- Enterprise-grade hosted service.

- Features include sub-200 ms latency, fine-tuned voices, service-level agreements (SLAs), and enhanced watermark compliance.

- Targeted for call centers, healthcare, financial services, and other regulated environments.

Significance of the Open-Source Release

Chatterbox Multilingual stands out by combining:

- Multilingual, zero-shot TTS

- Expressive, emotional control

- Watermarking for transparency

- Open licensing under MIT

It competes with—and in blind listener evaluations even surpasses—proprietary systems like ElevenLabs, offering a responsible, accessible, and community-driven alternative. By opening the model, Resemble AI strengthens the field of multilingual speech synthesis and enables innovation in voice-driven experiences.

Example Applications

| Use Case | Advantage of Chatterbox |

| Video Games / NPC Dialogue | Emotional realism, multilingual, fast response |

| Audiobooks | Multi-language narration with natural tone |

| Assistive Communication | Personalized, expressive voice aids for accessibility |

| Virtual Assistants / Bots | Voice personalization by user, tonal control |

| Global Customer Support | Custom brand-voice support in multiple languages |

| Security / Verification | Embedded watermark for authenticity and trace detection |

Community Insights

Reddit users highlighted:

- Smooth functionality across macOS and Windows.

- Speed improvements via optimized torch compilation (up to 24× faster non-batched inference).

- Occasional quirks—like robotic artifacts or accent transfer issues depending on reference clip—suggest areas for further tuning.

Conclusion

Chatterbox Multilingual marks a leap in TTS technology—empowering developers with a free, multilingual, expressive, and ethically designed voice synthesis tool. Whether you’re creating accessible tech, real-time dialogue agents, localized content, or interactive media—this model delivers high-quality, custom speech generation with transparency and flexibility at its core.

Its open-source availability catalyzes further research, modification, and innovation across industries, redefining how voice AI should be built—and built responsibly.

FAQs

What is Chatterbox Multilingual?

An open-source, MIT-licensed TTS model with zero-shot voice cloning across 23 languages, expressive control, and watermarking.

How to install and use it?

Install via pip install chatterbox-tts, then use Python APIs or Gradio examples to generate multilingual voice or clone voices from short audio.

How does voice cloning work?

Provide a brief audio sample. The model uses speaker embeddings to clone the voice across languages, even enabling cross-language voice transfer.

What languages are supported?

23 languages, including Arabic, Chinese, English, French, Hindi, Swahili, and more.

How expressive is the speech?

Control emotional tone and intensity via parameters—unlike many static TTS models.

What does watermarking offer?

PerTh watermarking ensures output traceability and aligns with AI ethical use guidelines.

How does it compare to commercial tools?

Listeners preferred Chatterbox in blind tests vs. ElevenLabs at a rate of 63.75%. It’s also latency-friendly and open-access.