On August 20, 2025, the Chinese AI startup DeepSeek quietly unveiled DeepSeek V3.1—an open-source, high-performing large language model (LLM) that rivals proprietary giants while dramatically lowering access barriers. Released under the MIT license, this Mixture-of-Experts (MoE) model introduces hybrid inference, expansive context handling, and agent-level tool integration. With DeepSeek V3.1, developers, researchers, and enterprises enter a new era where cutting-edge AI is cost-effective, quickly deployable, and fully transparent.

What You Need to Know: DeepSeek V3.1 Highlights

- Hybrid Inference Architecture: A single model offering both “Thinking Mode” for in-depth reasoning and “Non-Thinking Mode” for fast, direct responses.

- Top-Tier Performance: Excels in coding, logic, and math benchmarks—surpassing other open-source models and even some proprietary rivals.

- Efficient and Affordable: Up to 68× cheaper than Claude Opus for similar performance; pricing set to drop further starting September 2025.

- Fully Open-Source under MIT License: Freedom to use, adapt, self-host, and integrate without restrictions.

- Built for AI Agents: Enhanced for function calling, API-tool use, and crafting autonomous workflows.

Architecture and Design: How DeepSeek V3.1 Works

Mixture of Experts (MoE) Structure

With 685B total parameters but only 37B activated per token, the MoE architecture balances impact with compute efficiency—maximizing knowledge capacity while minimizing runtime cost.

Massive Context Window

Capable of processing 128,000 tokens, DeepSeek V3.1 supports extremely long contexts—surpassing typical models—enabling entire books or documents to be handled seamlessly. It achieved this via a two-phase retraining approach: a 630B token 32K-extension and 209B token 128K-extension, optimized with efficient formats like FP8 and UE8M0.

Hardware-Focused Engineering

DeepSeek V3 pioneered mixed-precision formats (BF16, FP8 variants) and smart load balancing across GPU clusters for efficient training and inference at a cost of just $5.576 million on Nvidia H800s.

The Innovation: Hybrid Thinking vs. Non-Thinking Modes

This capability makes the difference:

Thinking Mode (deepseek-reasoner)

- Provides chain-of-thought reasoning and deep explanations

- Ideal for complex logic, math, code debugging

- Up to 64,000 output tokens

- Higher accuracy, slightly slower response time

Non-Thinking Mode (deepseek-chat)

- Fast, succinct answers

- Support for function calling and rapid completion

- Up to 8,000 output tokens

- Optimized for latency-sensitive tasks

Image source: Deepseek

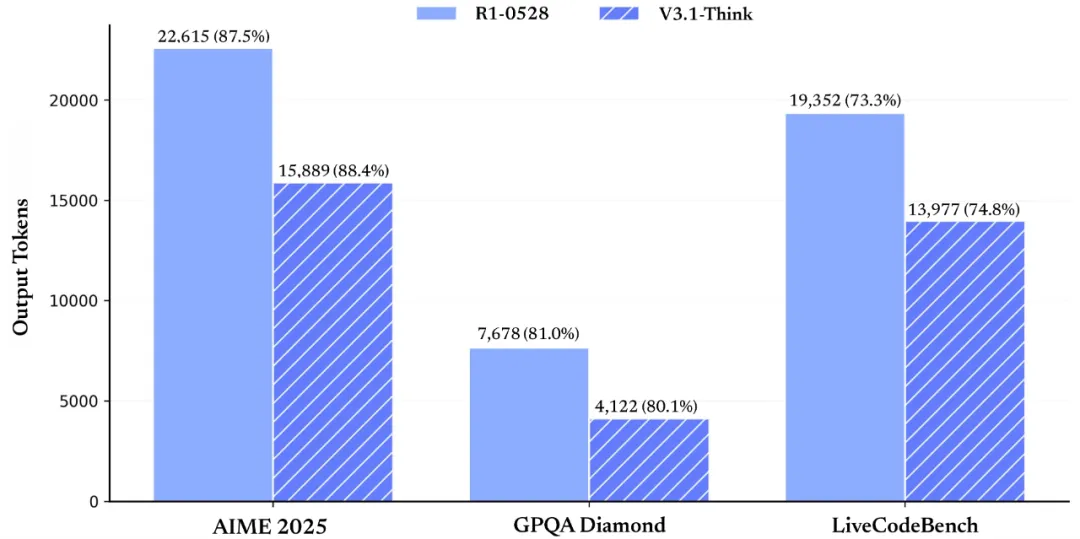

Performance Benchmarks: Where DeepSeek V3.1 Excels

DeepSeek V3.1 delivers elite performance across a range of evaluations:

| Benchmark | Non-Thinking Score | Thinking Mode Score |

| HumanEval (Coding) | 80.5% | — |

| LiveCodeBench | 56.4% | 74.8% |

| Aider Programming | 68.4% | 76.3% |

| MMLU Redux (Reasoning) | 91.8% | 93.7% |

| GPQA Diamond | 74.9% | 80.1% |

| AIME 2024 (Math) | 66.3% | 93.1% |

This places DeepSeek V3.1 on par or ahead of DeepSeek-R1 and strong proprietary competitors like Claude Opus.

Additional community tests show Intelligence and Coding Index comparable to GPT-OSS-120B, albeit with slower generation speeds.

AI Agents Made Real: Tool Use & Function Calling

DeepSeek V3.1 is structurally designed for agent-driven workflows:

- Function calling enabled in non-thinking mode for structured API interaction

- Special templates built for Code Agents, Search Agents, and Python Agents, enabling autonomous multi-step tasks. This agent focus makes DeepSeek V3.1 ideal for building automated applications that research, execute code, or interface with external systems.

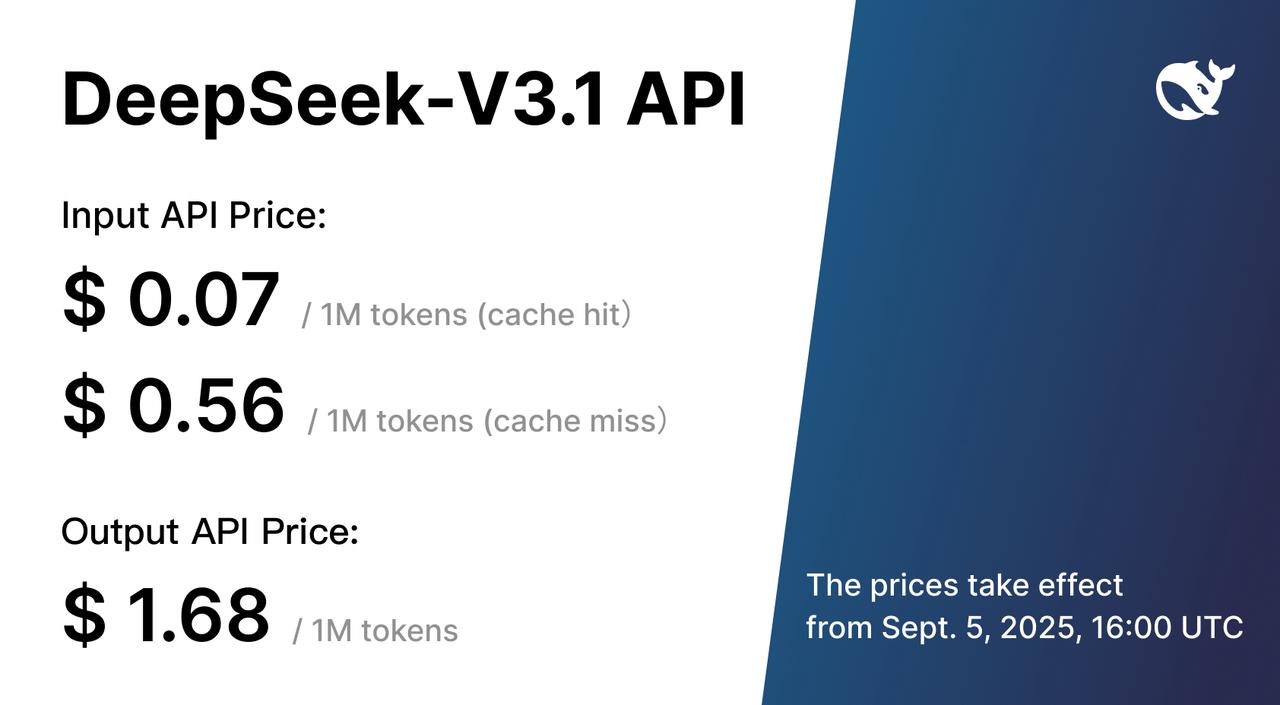

Pricing & Cost Efficiency

DeepSeek introduces a highly competitive pricing structure for API access:

- $0.07 per million input tokens (cache hit)

- $0.56 per million input tokens (cache miss)

- $1.68 per million output tokens

At an estimated $1 cost per full coding task, DeepSeek V3.1 delivers 71.6% pass rate on Aider benchmark—while Claude Opus costs around $68, with a similar score.

Self-Hosting and Local Control

True open source means you can run DeepSeek V3.1 entirely on your own hardware. Requirements are considerable:

- GPU memory: 20.8+ GB (FP4 quantized)

- RAM: 500–768 GB

- Storage: 200–400 GB depending on quantization

- Hardware: Nvidia H100/H200 for full performance (or RTX 3090 for experimentation)

Frameworks like llama.cpp support hardware-specific installations, enabling full local control and privacy.

How DeepSeek V3.1 Compares to Alternatives

| Model | License | Context | Hybrid Modes | Cost |

| DeepSeek V3.1 | MIT (Open-Source) | 128K | Yes | Lowest |

| GPT-4o (OpenAI) | Closed | 128K | — | High |

| Claude 3.5 Sonnet | Closed | 200K | — | Medium-High |

| Llama 3.1 405B | Open (Meta) | 128K | — | Free self-host |

DeepSeek V3.1 stands out for blending open-source freedom with hybrid capabilities and top-tier performance at minimal cost.

Use Cases: Who Should Use DeepSeek V3.1?

Developers & Startups

- Build agentic AI tools and MVPs affordably

- Prototype custom chatbots with access to long documentation

Enterprises & Corporations

- On-prem deployment for sensitive data control

- Automate reporting, summarization, and task workflows

Academia & Researchers

- Reproducible research with verifiable architecture

- Massive context window for large-scale analysis

Global & Multilingual Teams

- Supports 50+ languages with cross-language semantic search

- Great for global documentation and multilingual customer service

Real-World Business Impact

DeepSeek’s release already rippled across markets:

- 344B+ Chinese chipmakers like Cambricon surged after DeepSeek’s V3 chips support announcement.

- The earlier R1 model triggered stock drops in Nvidia and other U.S. tech giants amid fears over lower-cost Chinese innovation.

DeepSeek V3.1 continues to challenge traditional economic models in AI—prioritizing efficiency, transparency, and community-driven growth.

Conclusion: A New Open-Source Champion Emerges

DeepSeek V3.1 is more than an incremental upgrade—it’s a game-changer for open-source AI. With hybrid inference, massive context capacity, top-tier performance, minimal cost, and full accessibility under the MIT license, it levels the field. Whether you’re building autonomous agents, processing vast corpora, or innovating at scale—DeepSeek V3.1 offers the sophistication and freedom needed to redefine AI adoption.

The AI ecosystem is shifting: power is moving closer to developers, researchers, and teams with vision—not just deep pockets. DeepSeek V3.1 signals a future where open, affordable, and powerful AI is the rule, not the exception.

FAQs

What is DeepSeek V3.1?

An open-source, hybrid inference LLM with thinking and non-thinking modes, 128K context, and strong agent capabilities.

How is it different from V3-0324 or R1?

V3.1 introduces hybrid modes, enhanced performance (up to +40%), and superior tools support vs older versions.

How does V3.1 compare to GPT-5 or Claude 4.1?

It matches or exceeds open-source reasoning and coding; offers hybrid mode and vastly lower cost.

What are typical benchmarks?

71.6% Aider code pass rate; 93.1% on AIME math; top performance in MMLU, GPQA.

Is it safe and secure?

Open-source ensures transparency. Self-hosting gives full data control—check upcoming safety research for nuanced evaluation.