NVIDIA's speech AI work has been evolving rapidly, and 2025 brought a landmark release. Alongside Granary, the most extensive open-source speech dataset for European languages, NVIDIA introduced two cutting-edge AI models, Canary-1b-v2 and Parakeet-tdt-0.6b-v3, further solidifying its role as a pioneer in voice technology. These releases are not merely technical upgrades; they are steps toward accessible, inclusive, high-performance speech AI for businesses, developers, and researchers worldwide.

This article explores how NVIDIA is shaping the future of multilingual speech AI through its speech-to-text and text-to-speech models and the NVIDIA Riva SDK, and the ripple effects this has on conversational AI, real-time translation, and universal communication.

Why NVIDIA Speech AI Matters in 2025

Speech AI is now a core technology in customer service, healthcare, gaming, and entertainment. Real-world applications such as AI speech generators, NVIDIA voice changer tools, and conversational AI systems are in high demand. One of the main challenges, however, has always been access to large, high-quality datasets, especially for less commonly spoken languages.

NVIDIA’s Granary dataset, combined with Canary and Parakeet models, directly addresses this challenge by opening doors to scalable, accurate, and multilingual voice solutions.

The Granary Dataset: A Foundation for Multilingual AI

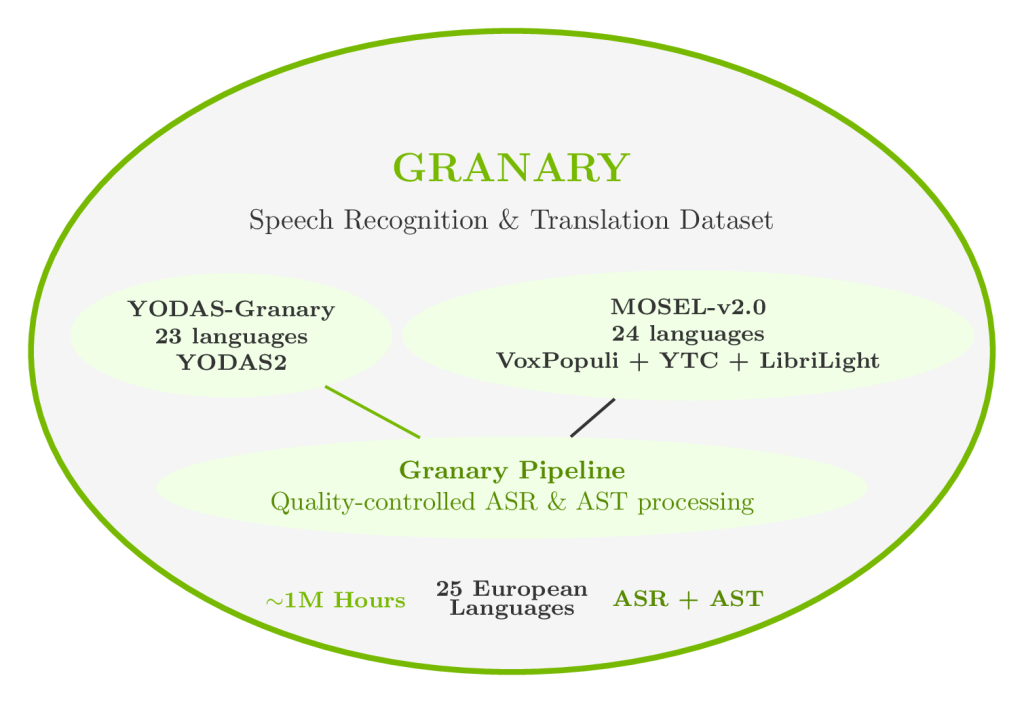

At the heart of this breakthrough is Granary, a dataset containing ~1 million hours of audio across 25 European languages. Created in collaboration with Carnegie Mellon University and Fondazione Bruno Kessler, Granary is engineered to support both Automatic Speech Recognition (ASR) and Automatic Speech Translation (AST), offering a versatile foundation for multilingual voice AI advancements.

Key Features of Granary:

- Scale: 650,000 hours for ASR and 350,000 hours for translation.

- Coverage: 25 European languages, including Maltese, Estonian, and Croatian—languages often ignored in AI research.

- Efficiency: Thanks to an innovative pseudo-labeling pipeline, developers need about 50% less training data to reach the same accuracy as with traditional datasets.

- Open-source license: Freely available under CC-BY, enabling commercial use.

- Integration: Compatible with NVIDIA NeMo Speech Data Processor for streamlined training.

Why it matters: For developers, Granary eliminates one of the most expensive bottlenecks—labeled data. Startups, researchers, and enterprises can now build competitive multilingual applications without millions in data collection costs.
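NeMo's ASR tooling conventionally reads training data from a JSON-lines "manifest", one object per utterance with `audio_filepath`, `duration`, and `text` fields. The sketch below writes such a manifest and totals its hours using only the standard library. It is an illustration under assumptions: the file names and transcripts are hypothetical, and Granary's own released schema may use different field names, so check the dataset card before adapting it.

```python
import json
import tempfile
from pathlib import Path


def write_manifest(entries, out_path):
    """Write (audio_path, duration_s, transcript) tuples as a JSON-lines manifest.

    Field names follow NeMo's common ASR manifest convention; rename them if your
    data source (for example, Granary's release) uses a different schema.
    """
    out_path = Path(out_path)
    with out_path.open("w", encoding="utf-8") as fh:
        for audio_path, duration_s, transcript in entries:
            record = {
                "audio_filepath": str(audio_path),
                "duration": float(duration_s),
                "text": transcript,
            }
            # ensure_ascii=False keeps characters such as Maltese "ġ" human-readable.
            fh.write(json.dumps(record, ensure_ascii=False) + "\n")
    return out_path


def total_hours(manifest_path):
    """Sum the "duration" field (seconds) across a manifest and return hours."""
    total_s = 0.0
    with Path(manifest_path).open(encoding="utf-8") as fh:
        for line in fh:
            if line.strip():
                total_s += json.loads(line)["duration"]
    return total_s / 3600.0


if __name__ == "__main__":
    # Hypothetical clips: paths and transcripts are placeholders.
    entries = [
        ("clips/mt_0001.wav", 4.2, "Bonġu, kif inti?"),
        ("clips/hr_0001.wav", 3.8, "Dobar dan, kako ste?"),
    ]
    with tempfile.TemporaryDirectory() as tmp:
        path = write_manifest(entries, Path(tmp) / "train_manifest.jsonl")
        print(f"{total_hours(path):.4f} hours of audio")
```

Keeping one record per line means a manifest for hundreds of thousands of hours can be streamed line by line rather than loaded into memory at once.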

Canary-1b-v2: The Multilingual All-Rounder

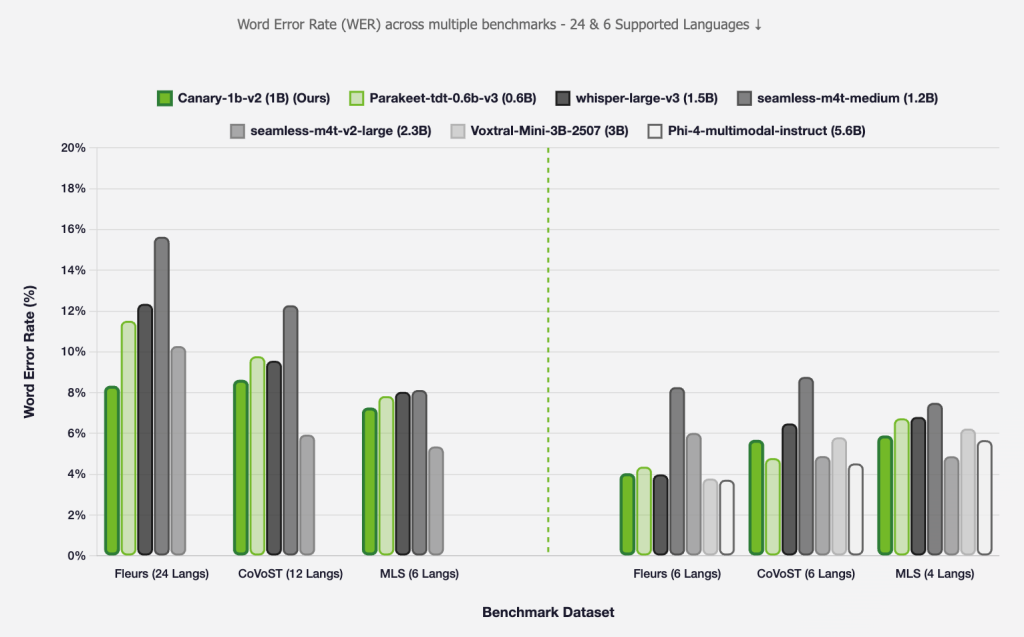

With roughly 1 billion parameters, Canary-1b-v2 is built on NVIDIA's encoder-decoder architecture and optimized for high accuracy in both speech-to-text transcription and cross-lingual speech translation.

Core Strengths of Canary-1b-v2:

- ASR & AST: Transcribes all 25 languages and translates between English and each of the other 24 languages, in both directions.

- Accuracy & Efficiency: Delivers quality comparable to models three times its size while running inference up to 10x faster.

- Advanced Formatting: Provides punctuation, capitalization, and precise timestamps—critical for subtitling and analytics.

- Translation Timestamps: A unique feature allowing synchronized translated subtitles in real time.

- Noise Robustness: Designed to stay accurate in the presence of background noise.

Use cases:

- Real-time subtitles in international conferences.

- Customer support chatbots capable of multilingual transcription & translation.

- Accurate subtitles in multiple European languages for media companies.
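Timestamps are what turn a transcript or translation into subtitles. As a minimal sketch, the functions below convert a list of `(start_s, end_s, text)` segments into the SubRip (SRT) subtitle format. The segment tuples are an assumed, simplified input: NeMo returns timestamps in its own structure, which you would first map into this shape, and the German example lines are hypothetical.

```python
def format_srt_time(seconds):
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments):
    """Convert (start_s, end_s, text) segments into an SRT document string."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        if end < start:
            raise ValueError(f"segment {index} ends before it starts")
        # Each cue: sequence number, time range, then the subtitle text.
        blocks.append(
            f"{index}\n{format_srt_time(start)} --> {format_srt_time(end)}\n{text.strip()}\n"
        )
    # SRT separates consecutive cues with a blank line.
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical translated segments (English -> German) for illustration only.
    segments = [
        (0.0, 2.5, "Willkommen zur Konferenz."),
        (2.5, 5.75, "Heute sprechen wir über Sprach-KI."),
    ]
    print(segments_to_srt(segments))
```

Because translated output carries its own timestamps, the same function can emit subtitle tracks for the source language and for each translation.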

Parakeet-tdt-0.6b-v3: The Real-Time Specialist

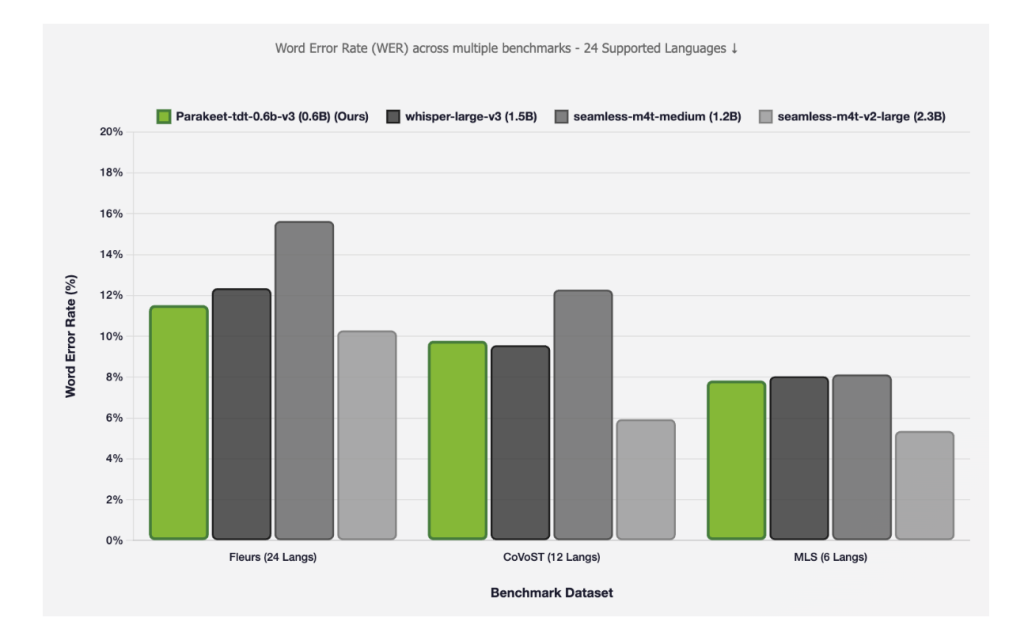

While Canary is versatile, Parakeet-tdt-0.6b-v3 is a specialist built for speed and scalability. With ~600 million parameters, it is optimized for real-time transcription.

Core Strengths of Parakeet-tdt-0.6b-v3:

- Automatic Language Detection: Identifies the spoken language without it being specified in advance.

- Low Latency: Optimized for streaming and live scenarios.

- Long Audio Handling: Transcribes up to 24 minutes of audio in a single pass with full attention, and up to 3 hours with local attention.

- Robust Transcription: Handles complex speech, numbers, and noisy environments.

Use cases:

- Live event subtitling.

- Real-time transcription for podcasts and lectures.

- Telephony analysis at scale.
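The long-audio limits listed for Parakeet translate into a simple planning rule. The hypothetical helper below picks an attention mode from a file's duration and, for recordings beyond the stated 3-hour local-attention limit, splits them into overlapping windows. The thresholds come from the figures above; the 5-second overlap and the function itself are illustrative assumptions, not part of NeMo. (In NeMo, switching a loaded model to local attention is done on the model itself, for example via `change_attention_model`; check the model card for the exact arguments.)

```python
FULL_ATTENTION_LIMIT_S = 24 * 60        # ~24 minutes, per the figure above
LOCAL_ATTENTION_LIMIT_S = 3 * 60 * 60   # ~3 hours, per the figure above


def plan_long_audio(duration_s, overlap_s=5.0):
    """Pick an attention mode and list (start_s, end_s) windows to transcribe.

    Hypothetical helper: returns ("full", [...]) when the whole file fits the
    full-attention limit, otherwise ("local", [...]). Files longer than the
    local-attention limit are split into overlapping windows so that a word cut
    at one window's edge can appear whole in the neighbouring window.
    """
    if duration_s < 0:
        raise ValueError("duration must be non-negative")
    if not 0 <= overlap_s < LOCAL_ATTENTION_LIMIT_S:
        raise ValueError("overlap must be shorter than a window")
    if duration_s <= FULL_ATTENTION_LIMIT_S:
        return "full", [(0.0, duration_s)]
    if duration_s <= LOCAL_ATTENTION_LIMIT_S:
        return "local", [(0.0, duration_s)]

    windows = []
    start = 0.0
    step = LOCAL_ATTENTION_LIMIT_S - overlap_s  # advance less than a full window
    while True:
        end = min(start + LOCAL_ATTENTION_LIMIT_S, duration_s)
        windows.append((start, end))
        if end >= duration_s:
            break
        start += step
    return "local", windows


if __name__ == "__main__":
    for minutes in (10, 90, 240):
        mode, windows = plan_long_audio(minutes * 60)
        print(minutes, "min ->", mode, windows)
```

Merging the per-window transcripts (dropping words duplicated in the overlap) is left out to keep the sketch short.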

Canary vs. Parakeet: Choosing the Right Tool

| Feature | Canary-1b-v2 | Parakeet-tdt-0.6b-v3 |
|---|---|---|
| Primary Task | Transcription & Translation | Real-time Transcription |
| Languages | 25 | 25 |
| Unique Feature | Translation timestamps | Automatic language detection |
| Suitable For | Subtitling, multilingual assistants, analytics | Live subtitling, mass transcription, streaming |

Verdict:

- Choose Canary when translation is critical.

- Choose Parakeet when real-time processing is the priority.
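The verdict above can be encoded as a small rule of thumb. The helper below is hypothetical; the model IDs are the Hugging Face names under which NVIDIA publishes these checkpoints, and the default for plain offline transcription (preferring the smaller model) is an assumption rather than official guidance.

```python
CANARY = "nvidia/canary-1b-v2"
PARAKEET = "nvidia/parakeet-tdt-0.6b-v3"


def choose_model(needs_translation, needs_realtime=False, language_known=True):
    """Return (model_id, reason) following the Canary-vs-Parakeet rule of thumb."""
    if needs_translation:
        # Of the two models, only Canary translates, so it wins even if latency matters.
        return CANARY, "translation required"
    if needs_realtime:
        return PARAKEET, "low-latency transcription"
    if not language_known:
        # Parakeet identifies the spoken language on its own.
        return PARAKEET, "automatic language detection"
    # Assumption: for offline transcription either model works; default to the smaller one.
    return PARAKEET, "smaller model for plain transcription"


if __name__ == "__main__":
    print(choose_model(needs_translation=True))
    print(choose_model(needs_translation=False, needs_realtime=True))
```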

NVIDIA Riva: The Developer Ecosystem

All these models are integrated into NVIDIA Riva, a speech AI SDK for building real-time, production-grade applications.

With Riva, developers can:

- Deploy speech-to-text and text-to-speech AI at scale.

- Integrate NVIDIA RTX Voice for noise suppression.

- Customize voice changers and AI speech generators for entertainment and gaming.

- Build multilingual NVIDIA conversational AI assistants.

Real-World Applications of NVIDIA Speech AI

- Customer Service: Enterprises can deploy chatbots capable of handling multilingual queries instantly.

- Healthcare: Doctors can transcribe notes in their native languages in real time.

- Gaming & Entertainment: Streamers use RTX Voice and NVIDIA voice changer tools to enhance live interactions.

- Education: Universities can provide multilingual lecture transcriptions and instant translations.

- Media & Journalism: News agencies can caption broadcasts in multiple languages simultaneously.

Strategic Implications for Europe and Beyond

This is more than a technology release: it is a strategic NVIDIA effort to promote European digital sovereignty. By providing open-source tools in Europe's native languages, NVIDIA reduces the region's dependence on models trained largely on English data, such as OpenAI Whisper.

This democratization allows:

- Startups in smaller countries to compete globally.

- Governments and institutions to preserve linguistic diversity.

- Enterprises to build AI ecosystems optimized for NVIDIA GPUs, fueling hardware demand.

Getting Started with NVIDIA Speech AI

Developers can begin experimenting with these models via the NVIDIA NeMo Toolkit. With a simple installation, they can load Canary or Parakeet, process audio, and deploy speech services—without deep AI expertise.

Example workflow:

- Install NeMo.

- Load Canary-1b-v2 or Parakeet-tdt-0.6b-v3.

- Run transcription or translation with a single command.

- Integrate results into chatbots, translation services, or streaming apps.

Final Thoughts

The releases of Granary, Canary-1b-v2, and Parakeet-tdt-0.6b-v3 mark a defining moment for NVIDIA speech AI. By tackling the long-standing problem of multilingual data scarcity and opening up large, high-performance models for general use, NVIDIA has enabled developers, enterprises, and researchers to build applications that are intelligent, fast, and inclusive.

Whether through NVIDIA text-to-speech AI, speech-to-text engines, AI speech generators, or RTX Voice integration, the impact will be felt across sectors, from customer support to gaming.

This is more than an upgrade; it is the beginning of a new chapter in conversational AI, one in which language barriers in Europe and beyond are not just reduced but increasingly removed.