Introduction: A New Type of Vision Language Model

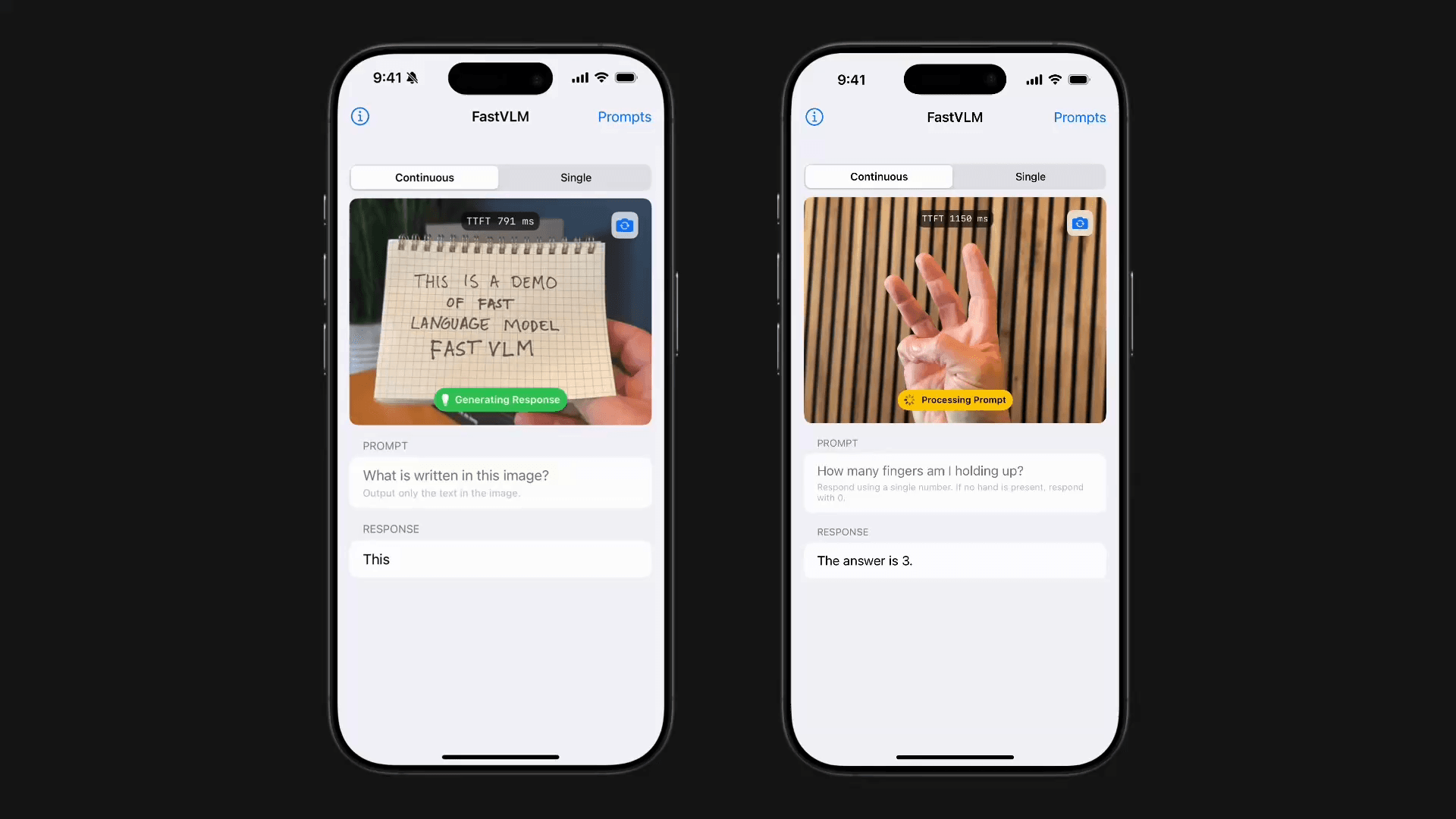

Vision Language Models (VLMs) are at the forefront of multimodal AI—understanding both images and text. A new type of Vision Language Model, FastVLM, introduced by Apple researchers at CVPR 2025, pushes the boundaries of VLM performance. Designed for real-time VLM applications and on-device VLM efficiency, FastVLM dramatically improves the FastVLM accuracy latency tradeoff without sacrificing the ability to handle high resolution image processing.

Image Source: Apple

The Accuracy–Latency Conundrum in Vision Language Models

Traditional VLMs see accuracy rise with image resolution—especially in tasks like document analysis, UI navigation, and fine-grain scene comprehension. However, higher resolutions escalate the time-to-first-token (TTFT)—the sum of vision encoder latency plus LLM pre-filling time—posing a practical barrier when striving for real-time performance or privacy-preserving on-device VLM apps.

Enter FastVLM, which addresses this trade-off using a hybrid vision encoder and an architecture called FastViTHD, optimized for high-resolution inputs. This enables a new level of hybrid vision encoders for VLMs, delivering both speed and accuracy.

FastViTHD Architecture Explained: Hybrid Design for Optimal Performance

FastViTHD—short for “Fast Vision Transformer High-Definition”—is the backbone of FastVLM. It combines convolutional and transformer blocks in a five-stage encoder design. The first three stages use convolutional “RepMixer” blocks; the final two leverage multi-headed self-attention. Strategically, an extra downsampling stage ensures that even at high resolutions, the number of tokens is greatly reduced—4× fewer than FastViT and 16× fewer than ViT-L/14—while maintaining low latency.

Moreover, integrating multi-scale features (via depthwise convolutions rather than average pooling) boosts accuracy on VLM benchmarks such as TextVQA or DocumentVQA—all while keeping encoding times low.

Visual Token Quality in FastVLM

FastViTHD generates higher-quality visual tokens, enabling significantly reduced dependence on token pruning methods. These high-fidelity tokens simplify the MLP projection layer for visual tokens to the LLM-embedding space—no need for complex merging or compression strategies.

FastVLM Accuracy vs. Latency Tradeoff

FastVLM, powered by FastViTHD, demonstrates suitable-in-class accuracy latency trade-off in vision language models:

- 85× faster TTFT compared to LLaVA-OneVision (0.5B LLM) at 1152×1152 resolution, with equivalent accuracy.

- On the same benchmarks (SeedBench, MMMU), FastVLM performs comparably while using a 3.4× smaller vision encoder.

- Even larger variants (with Qwen2-7B LLM) outpace Cambrian-1-8B models with 7.9× faster TTFT.

This demonstrates an unmatched accuracy latency tradeoff in VLMs, balancing fast response with high-fidelity understanding.

Comparing FastVLM vs. Token Pruning, Dynamic Tiling (AnyRes), and Traditional Architectures

FastVLM vs. Token Pruning Methods

Token pruning or merging techniques (e.g., reducing visual tokens heuristically) often incur significant architectural complexity. FastVLM, by contrast, inherently avoids this through FastViTHD’s efficient encoder design—yielding strong performance with a more elegant architecture.

FastVLM vs. Dynamic Tiling (AnyRes)

Dynamic tiling splits high-res images into tiles, processes each separately, and merges tokens. While useful, it often increases latency due to repeated encoding. The authors show that FastVLM without tiling outperforms tile-based approaches in accuracy-latency trade-offs—even at very high resolutions. Only at extreme resolutions does combining FastVLM with AnyRes yield marginal gain.

FastVLM vs. Traditional VLMs

Compared to popular VLMs:

- LLava-OneVision (0.5B): FastVLM is 85× faster and 3.4× smaller.

- SmolVLM (~0.5B): FastVLM is 5.2× faster.

- Cambrian-1 (7B): FastVLM is 21× faster, with better or comparable performance metrics.

FastVLM vs FastViT vs ConvNeXT: Architectural Comparison

FastViT is a prior hybrid architecture combining convolutional and transformer paths with strong speed-accuracy performance. It uses structural reparameterization and RepMixer layers to achieve fast, accurate, and efficient image understanding on mobile hardware.

FastViTHD builds upon FastViT—adding architectural elements for high-resolution VLMs:

- Extra downsampling stage

- Multi-scale features

- Optimized token reduction and latency.

Empirical comparisons show FastViTHD steeply outperforms both FastViT and ConvNeXT in high-resolution contexts, achieving lower vision latency and higher retrieval performance.

Time-to-First-Token and On-Device VLM Efficiency

FastVLM significantly reduces time to first token (TTFT): the critical latency metric in VLM pipelines encompassing the encoder and initial LLM response. With FastViTHD, TTFT is cut by a factor of 3× to 85×, depending on resolution and model variant, making real-time VLM applications feasible on devices.

On-Device Demonstrations

Apple released an iOS/macOS demo app via MLX, proving FastVLM operates efficiently on Apple Silicon with minimal lag—ideal for privacy preserving on-device VLM apps such as assistants, UI navigation, and AR tools.

Real-Time VLM Applications & Use Cases

Because of its low latency and strong accuracy, FastVLM enables numerous real-time VLM applications:

- Accessibility assistants: On-device, quick description of scenes and text for visually impaired users.

- UI navigation and robotics: Fast visual command interpretation without cloud lag.

- Real-time gaming: High-res scene understanding for AR/VR assistants.

- Document analysis: Accurate processing of legal pages or contracts with high-resolution detail.

- Privacy-sensitive tasks: On-device performance ensuring user data never leaves the device.

Scalability: Balancing LLM Size vs Image Resolution

FastVLM research explores the scaling LLM size vs image resolution tradeoff. Using multiple LLM decoders (0.5B, 1.5B, 7B), they compute Pareto-optimal curves, identifying the suitable performance for given TTFT budgets.

Key takeaway: combining moderate image resolution with an appropriately sized LLM often outperforms simply increasing resolution. FastVLM achieves up to 3× faster runtime for the same accuracy compared to traditional setups.

Technical Components Breakdown

- FastVLM architecture: Vision encoder (FastViTHD) + MLP projection + LLM decoder.

- FastViTHD backbone design: 5 stages with convolutional and transformer layers; downsampling optimized for token and latency reduction.

- Hybrid convolutional transformer vision encoders: FastViTHD is an example, combining Conv + Transformer to enhance image resolution processing efficiency.

- MLP projection layer: Maps visual tokens from vision encoder into LLM embedding space seamlessly.

- Visual token quality: Higher from FastViTHD, reducing need for token pruning or merging.

Comparative Summary

| Feature / Comparison | FastVLM (with FastViTHD) | Competing Architectures |

|---|---|---|

| TTFT Speedup | Up to 85× faster | LLaVA-OneVision, Cambrian-1 much slower |

| Vision Encoder Size | 3.4× smaller | LLaVA-OneVision, ViT-L/14 are large |

| Hybrid Encoders | FastViTHD (Conv + Transformer) | ViT-L/14 (Transformer), ConvNeXT (Conv only) |

| Token Pruning | No pruning required | Many methods require complex token merges |

| Tile-based Processing (AnyRes) | Not needed; only helps at extreme resolutions | Useful but often slower overall |

| Scalability (LLM vs Resolution) | Pareto-optimal balance possible | Often suboptimal / inefficient combinations |

| On-Device Feasibility | Demonstrated via iOS/macOS MLX app | Often cloud or high-latency focused |

Conclusion: FastVLM Paves the Way for Agile, Accurate Visual AI

FastVLM marks a milestone in the evolution of Vision Language Models. By addressing the accuracy latency tradeoff, especially in high-resolution image understanding, it enables real-time, on-device, and privacy-safe VLM applications for everyday use.

From accessibility assistants and robotic control to document analysis and real-time gaming, FastVLM unlocks a spectrum of powerful use cases—bridging the gap between performance and efficiency without compromise.

FAQs

What is FastVLM?

FastVLM is a novel vision-language model that enables processing much higher-resolution images but much faster than previous models without sacrificing accuracy, which makes it suitable for real-time and on-device applications.

How does FastViTHD improve performance?

FastViTHD combines convolution and transformer layers with smart token reduction to speed up image processing while maintaining strong accuracy, even at high resolutions.

Why is time-to-first-token (TTFT) important?

TTFT reflects how quickly a model can start generating text after seeing an image. FastVLM significantly reduces this latency, providing seamless and immediate responsiveness in real-world applications.

Can FastVLM run on devices like phones or laptops?

Yes, it runs efficiently on Apple Silicon devices, supporting fast, privacy-friendly applications without needing cloud processing.

What are common uses for FastVLM?

FastVLM works well in accessibility tools, UI navigation, document analysis, AR/VR gaming, and any scenario needing fast, accurate image understanding on-device.