Machine learning is moving from experimental projects into everyday business software. By 2026, it is expected to be even more deeply embedded in decision-making. Businesses now rely on models not only for forecasting but also for real-time operational choices that can materially affect revenue, customer experience and growth. Because of this shift, understanding how different machine learning algorithms behave, and where each one excels, has become essential for businesses.

In this guide, we explain the most relevant algorithms used across industries, with accuracy, clarity and a practical viewpoint.

What Is a Machine Learning Algorithm?

A machine learning algorithm is a specific method that studies data, identifies patterns and uses them to classify or predict new information. A simple example is a house price predictor that analyses past sales. These algorithms matter because they determine how quickly and accurately a model learns, evaluates new inputs and supports decisions in a real-world workflow.

How Machine Learning Algorithms Work

Most ML algorithms share one common process, although each handles data differently. You start with raw information, clean it, and turn it into usable features. The model is then trained on one portion of the data and tested on the remaining portion. If it performs well, it moves into production.

A basic workflow can be visualised like this:

Data → Feature Selection → Algorithm Choice → Training → Testing → Evaluation

Training teaches the system how different features connect with labels. Testing checks whether those relationships hold up on new examples. Clean data, correct metrics and the right method all influence the final reliability of any machine learning solution.
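To make the train-then-test step concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions rather than a production pipeline: the helper names (`holdout_split`, `accuracy`, `evaluate`), the toy dataset and the one-threshold "model" are all invented for this example.

```python
import random

def holdout_split(rows, test_ratio=0.25, seed=0):
    """Shuffle a copy of the rows and hold out a fraction for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]

def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def evaluate(threshold, rows):
    """Accuracy of the rule 'predict 1 when the feature exceeds the threshold'."""
    return accuracy([int(x > threshold) for x, _ in rows], [y for _, y in rows])

# Toy dataset (assumption): the label is 1 whenever the feature exceeds 5.
data = [(x, int(x > 5)) for x in range(12)]
train, test = holdout_split(data)

# Training: choose the threshold that scores best on the training rows only.
best_threshold = max(range(12), key=lambda t: evaluate(t, train))

# Testing: score that threshold on rows the model has never seen.
train_accuracy = evaluate(best_threshold, train)
test_accuracy = evaluate(best_threshold, test)
print(f"threshold={best_threshold} train={train_accuracy:.2f} test={test_accuracy:.2f}")
```

Because the held-out rows play no part in choosing the threshold, the test score is the more honest estimate of how the rule will behave on new data.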

Top 10 Machine Learning Algorithms

Before diving into the detailed explanations, here are the ten algorithms covered in this guide, along with their defining advantages:

- Linear Regression – Quick forecasts and relationship modelling.

- Logistic Regression – A strong choice for binary and multi-class classification.

- Decision Trees – Easy to visualise and simple to interpret.

- Random Forest – Offers higher accuracy and copes well with messy datasets.

- Support Vector Machines (SVM) – Effective in high-dimensional problems.

- K-Means Clustering – Strong unsupervised performance and simple grouping.

- K-Nearest Neighbours (KNN) – Useful for similarity-based predictions.

- Naïve Bayes – Fast and accurate for text-focused tasks.

- Gradient Boosting Machines (GBM) – Competition-grade accuracy.

- Neural Networks – Backbone of modern deep learning algorithms.

Below is an in-depth look at each method, where it fits and how teams in 2026 are using it.

1. Linear Regression

Linear Regression remains a practical starting point for forecasting problems. It models the relationship between variables as a straight line, which makes it easy to interpret. Analysts still use it in sales forecasting, marketing budgeting and early-stage trend analysis. Even when teams plan to move toward advanced modelling, Linear Regression often acts as the baseline against which later improvements are compared.
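As a rough sketch of the straight-line idea, the snippet below fits a one-feature line by ordinary least squares using only the standard library. The function name `fit_line` and the ad-spend figures are made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: monthly ad spend (in thousands) against units sold.
ad_spend = [1, 2, 3, 4, 5]
units_sold = [12, 14, 16, 18, 20]

slope, intercept = fit_line(ad_spend, units_sold)
forecast = slope * 6 + intercept  # predicted units at a spend of 6
print(slope, intercept, forecast)
```

The slope and intercept can be read directly as "units gained per extra thousand spent" and "units sold at zero spend", which is why the method works well as a baseline.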

2. Logistic Regression

Logistic Regression handles classification problems: it predicts whether something belongs to a certain group. Its probability-based output helps businesses understand the likelihood of each outcome, which makes it useful in areas like customer churn, credit approval and other simple yes-or-no decisions. Businesses still favour it in 2026 because of its transparency and simple parameter tuning.
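The probability-based output can be sketched as follows. This is a minimal single-feature version trained by batch gradient descent. The function name `fit_logistic`, the learning rate, the epoch count and the churn data are assumptions chosen for the example, not recommended settings.

```python
import math

def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, learning_rate=0.1, epochs=20000):
    """Fit a weight and bias by batch gradient descent on the log loss."""
    weight, bias = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [sigmoid(weight * x + bias) - y for x, y in zip(xs, ys)]
        weight -= learning_rate * sum(e * x for e, x in zip(errors, xs)) / n
        bias -= learning_rate * sum(errors) / n
    return weight, bias

# Hypothetical data: support tickets filed last quarter, and whether the customer churned.
tickets = [1, 2, 3, 4, 5, 6]
churned = [0, 0, 0, 1, 1, 1]

weight, bias = fit_logistic(tickets, churned)
low_risk = sigmoid(weight * 1 + bias)   # churn probability for 1 ticket
high_risk = sigmoid(weight * 6 + bias)  # churn probability for 6 tickets
print(f"{low_risk:.3f} {high_risk:.3f}")
```

The model returns a probability rather than a hard label, so a team can choose its own cut-off, for example contacting any customer whose churn probability exceeds 0.5.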

3. Decision Trees

Decision Trees represent data in a branching structure, breaking complex decisions into step-by-step paths. This makes them easy to explain to business leaders. The method works well with both numerical and categorical variables, and some implementations can manage missing values without heavy preprocessing. Industries like healthcare and banking still rely on Decision Trees when model interpretability is non-negotiable.
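Each branch in a tree comes from choosing a split. One common criterion is Gini impurity, sketched below for a single numeric feature. The helper names (`gini`, `best_split`) and the loan data are hypothetical, and a full tree would apply this search recursively to each resulting group.

```python
def gini(labels):
    """Gini impurity of a group: 0.0 means every label is the same."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(values, labels):
    """Try each midpoint between sorted values; return (threshold, weighted impurity)."""
    best = (None, float("inf"))
    ordered = sorted(set(values))
    for low, high in zip(ordered, ordered[1:]):
        threshold = (low + high) / 2
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best

# Hypothetical loan data: applicant income (thousands) and the past decision.
income = [20, 25, 30, 45, 50, 60]
approved = ["no", "no", "no", "yes", "yes", "yes"]

threshold, impurity = best_split(income, approved)
print(f"split at income <= {threshold}, impurity {impurity}")
```

The result reads as a plain rule ("approve when income is above the threshold"), which is the interpretability benefit described above.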

4. Random Forest

Random Forest builds many decision trees and combines their predictions, by majority vote for classification or by averaging for regression. This approach helps reduce overfitting, which is a common issue with single trees. Because of its stability and reliable accuracy, it’s now widely used in fraud detection, loan approval scoring, insurance modelling and product recommendation systems. It generally handles noisy inputs better than a single tree and many other traditional machine learning algorithms.
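The vote-combining step can be sketched on its own. In the snippet below, the three "trees" are hand-written stand-ins with invented rules. A real forest would learn each tree from a random sample of the data and a random subset of features, a step that is omitted here.

```python
from collections import Counter

# Three hand-written stand-ins for trained trees (hypothetical rules).
def tree_amount(tx):
    return "fraud" if tx["amount"] > 900 else "ok"

def tree_country(tx):
    return "fraud" if tx["foreign"] and tx["amount"] > 300 else "ok"

def tree_night(tx):
    return "fraud" if tx["hour"] < 5 else "ok"

def forest_predict(trees, tx):
    """Return the label that most trees vote for."""
    votes = Counter(tree(tx) for tree in trees)
    return votes.most_common(1)[0][0]

trees = [tree_amount, tree_country, tree_night]

# The amount-based tree alone would miss this one, but it is outvoted.
night_purchase = {"amount": 450, "foreign": True, "hour": 3}
# Only the amount-based tree flags this one, so the forest lets it through.
large_purchase = {"amount": 1000, "foreign": False, "hour": 14}

print(forest_predict(trees, night_purchase), forest_predict(trees, large_purchase))
```

Because no single tree decides the outcome, an individual tree's quirks have less influence on the final prediction.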

5. Support Vector Machines (SVM)

Support Vector Machines look for the best boundary that separates classes. SVMs perform exceptionally well when the data has many features, which is why they’ve maintained strong relevance in fields like genetics, text classification and even some computer-vision tasks. With kernel options, SVMs adapt to nonlinear patterns, giving them an edge over simpler linear models.
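The kernel idea can be illustrated without training a full SVM. The sketch below checks, for two made-up points, that a degree-2 polynomial kernel gives the same number as a dot product in an explicitly expanded feature space. The function names are invented for the example.

```python
import math

def polynomial_kernel(a, b):
    """Degree-2 polynomial kernel: the squared dot product of two 2-D points."""
    return (a[0] * b[0] + a[1] * b[1]) ** 2

def explicit_features(p):
    """The 3-D feature map that the kernel above corresponds to."""
    return (p[0] ** 2, math.sqrt(2) * p[0] * p[1], p[1] ** 2)

a, b = (1.0, 2.0), (3.0, 4.0)

via_kernel = polynomial_kernel(a, b)
via_features = sum(x * y for x, y in zip(explicit_features(a), explicit_features(b)))
print(via_kernel, via_features)
```

Both routes agree, but the kernel never builds the expanded features. That shortcut is what lets an SVM draw curved boundaries while still solving a linear problem internally.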

6. K-Means Clustering

K-Means is one of the most common unsupervised ML algorithms. It groups data points based on similarity, usually by minimising the distance between points and the cluster centre. Companies rely on it for audience segmentation, customer profiling, business zoning, inventory categorisation and operational grouping. It remains a favourite because it’s easy to implement and scales well across large datasets.
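The "assign, then re-centre" loop behind K-Means can be sketched in a few lines. This is a bare-bones version with fixed starting centres and a fixed number of iterations. The function name `kmeans`, the customer data and the starting centres are assumptions for illustration.

```python
def kmeans(points, centres, iterations=10):
    """Assign each point to its nearest centre, then move each centre to its cluster mean."""
    for _ in range(iterations):
        clusters = [[] for _ in centres]
        for p in points:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centres]
            clusters[distances.index(min(distances))].append(p)
        # An empty cluster keeps its previous centre.
        centres = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centre
            for cluster, centre in zip(clusters, centres)
        ]
    return centres, clusters

# Hypothetical customers: (orders per month, average basket value).
customers = [(1, 20), (2, 22), (1, 25), (9, 80), (10, 85), (8, 90)]

# Both starting centres sit in the same group on purpose; the loop pulls them apart.
centres, clusters = kmeans(customers, centres=[(1, 20), (2, 22)])
print(centres)
print(clusters)
```

No labels are supplied at any point. The two customer segments emerge purely from distances, which is what makes the method unsupervised.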

7. K-Nearest Neighbours (KNN)

KNN predicts based on how close new data points are to existing examples. It works best in scenarios where similar items appear near each other in the feature space. E-commerce companies use KNN for recommendations, while medical centres use it for classifying patient symptoms. It doesn’t require explicit training, which means teams can build functional models quickly when deployment needs are urgent.
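A minimal sketch of this idea follows. There is no training step at all: the stored examples are the model. The function name `knn_predict`, the product data and the choice of `k=3` are assumptions for the example.

```python
import math
from collections import Counter

def knn_predict(examples, query, k=3):
    """Label the query by majority vote among its k closest stored examples."""
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical products: (price, rating) with a category label.
products = [
    ((10, 4.5), "budget"), ((12, 4.0), "budget"), ((15, 4.2), "budget"),
    ((90, 4.8), "premium"), ((95, 4.6), "premium"), ((110, 4.9), "premium"),
]

cheap_item = knn_predict(products, (14, 4.1))
pricey_item = knn_predict(products, (100, 4.7))
print(cheap_item, pricey_item)
```

Every prediction scans all stored examples, so the cost moves from training time to prediction time as the dataset grows. In practice, features are usually scaled first so that one wide-ranging column such as price does not dominate the distance.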

8. Naïve Bayes

Naïve Bayes is a probabilistic method that assumes features act independently of one another. Even with this simplification, it works remarkably well, especially for spam detection, sentiment analysis and short-text classification. Startups often rely on Naïve Bayes for lightweight applications where resources are limited, such as chat triaging, smart filters and quick content tagging.
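Here is a small sketch of a word-count Naïve Bayes text classifier with add-one smoothing. The function names, the four training messages and the labels are invented for illustration, and a real filter would need far more data.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(documents):
    """Count words per label; documents is a list of (text, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in documents:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocabulary = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocabulary

def classify(text, model):
    """Pick the label with the highest log-probability, using add-one smoothing."""
    word_counts, label_counts, vocabulary = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / total_docs)
        for word in text.lower().split():
            # Each word contributes independently: the "naive" assumption.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical training messages.
messages = [
    ("win a free prize now", "spam"),
    ("free money claim now", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train_naive_bayes(messages)
print(classify("claim your free prize", model))
print(classify("lunch meeting monday", model))
```

Training amounts to counting words, which is why the method stays cheap enough for lightweight applications.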

9. Gradient Boosting Machines (GBM)

GBM and its popular variants like XGBoost, CatBoost and LightGBM form one of the most accurate categories of machine learning algorithms today. These methods train multiple weak learners sequentially, with each new learner correcting the errors left by the previous ones. Financial modelling, fraud analytics and credit scoring teams depend heavily on GBM because it captures non-linear trends with impressive precision. Despite its longer training time, GBM continues to lead performance benchmarks for structured data.
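The sequential error-correcting idea can be sketched with one-split regression "stumps" as the weak learners. This is a simplified illustration for squared error on a single feature, not how XGBoost, CatBoost or LightGBM are implemented. All names, the data and the learning rate are assumptions.

```python
def fit_stump(xs, residuals):
    """Find the threshold split that minimises squared error on the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]

def boost(xs, ys, rounds=20, learning_rate=0.5):
    """Each round fits a stump to the current residuals and adds a damped copy of it."""
    predictions = [sum(ys) / len(ys)] * len(xs)  # start from the mean
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        threshold, left_mean, right_mean = fit_stump(xs, residuals)
        predictions = [
            p + learning_rate * (left_mean if x <= threshold else right_mean)
            for x, p in zip(xs, predictions)
        ]
    return predictions

def mean_squared_error(ys, predictions):
    return sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys)

# Hypothetical non-linear data: low, then high, then low again.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [2, 3, 2, 9, 10, 9, 4, 3]

error_baseline = mean_squared_error(ys, [sum(ys) / len(ys)] * len(ys))
error_one = mean_squared_error(ys, boost(xs, ys, rounds=1))
error_many = mean_squared_error(ys, boost(xs, ys, rounds=20))
print(error_baseline, error_one, error_many)
```

Each added round lowers the training error on this data. That is the strength of boosting, and also the reason it needs validation data to know when to stop.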

10. Neural Networks

Neural Networks form the foundation for several deep learning algorithms, including CNNs, RNNs, LSTMs and Transformer-based architectures. These models excel at tasks like speech interpretation, autonomous driving signals, medical imaging and natural language understanding. Although they require more computing power, Neural Networks remain unmatched in accuracy when datasets are large and patterns are complex. In 2026, almost every modern AI platform integrates neural-based components somewhere within its pipeline.
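To show what a network computes, here is a tiny forward pass with one hidden layer. The weights are hand-picked for illustration rather than learned. They make the network reproduce XOR, a pattern that no single straight-line model can capture. The function names are assumptions for the example.

```python
def relu(values):
    """Keep positive values and replace negatives with zero."""
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

# Hand-picked (not trained) weights: two hidden units, one output unit.
hidden_weights = [[1.0, 1.0], [1.0, 1.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -2.0]]
output_biases = [0.0]

def forward(inputs):
    """Run the inputs through the hidden layer, then the output layer."""
    hidden = relu(dense(inputs, hidden_weights, hidden_biases))
    return dense(hidden, output_weights, output_biases)[0]

outputs = [forward(pair) for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(outputs)
```

In a real network the weights are found by training on data, and the same layer-by-layer structure is repeated at a much larger scale.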

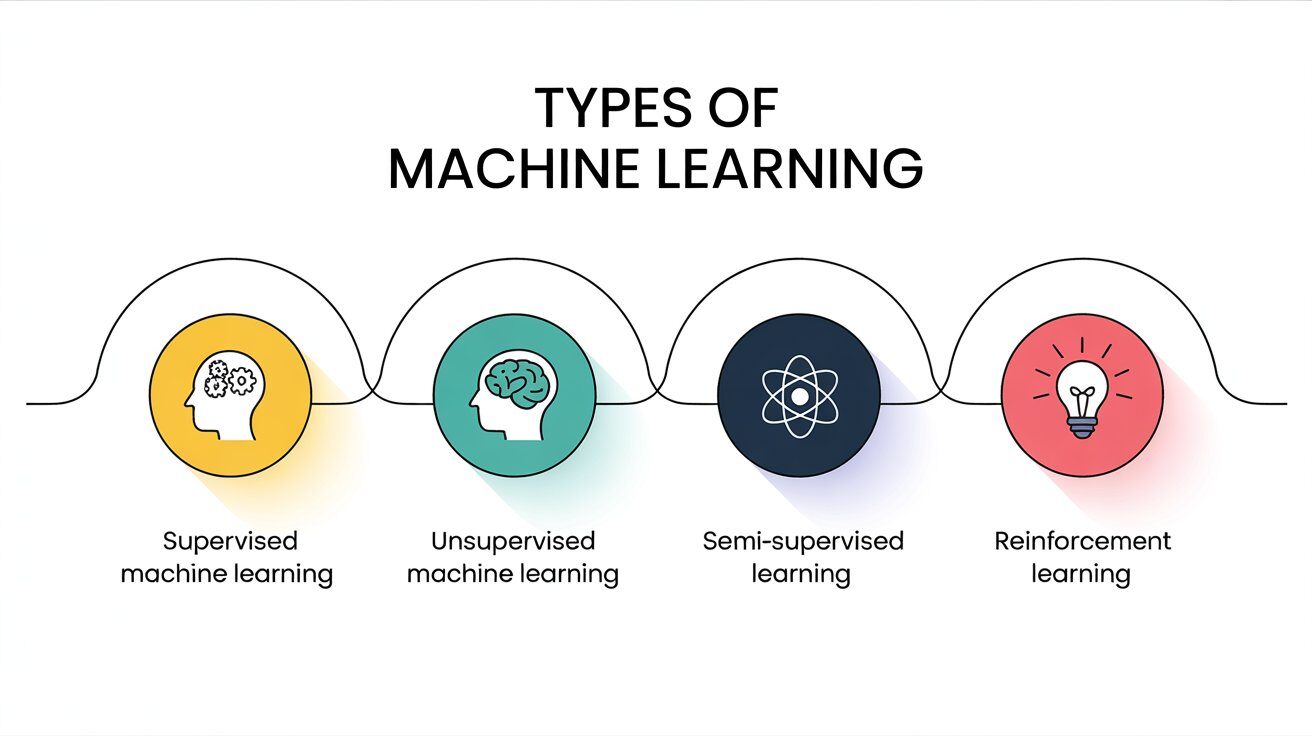

Types of Machine Learning Algorithms

Most methods fall into one of the following categories:

- Supervised Learning – Uses labelled data (e.g., Linear Regression, Random Forest).

- Unsupervised Learning – Works with unlabelled data (e.g., K-Means).

- Semi-Supervised Learning – Mixes labelled and unlabelled inputs.

- Reinforcement Learning – Systems learn by taking actions and receiving rewards.

Knowing the type helps narrow down the correct approach before building a full system.

Choosing the Right Algorithm

Selecting the right method isn’t always straightforward. Here are some decision factors often used by teams:

- Dataset size

- Type of problem (classification, regression, clustering)

- Need for interpretability

- Required accuracy

- Training time

- Operational constraints

- Real-time requirements

Comparison Table

| Algorithm | Accuracy | Training Time | Interpretability | Primary Strength |
| --- | --- | --- | --- | --- |
| Linear Regression | Medium | Fast | High | Forecasting |
| Logistic Regression | Medium | Fast | High | Simple classification |
| Decision Tree | Medium | Medium | High | Rule-based decisions |
| Random Forest | High | Medium | Medium | Complex predictions |
| SVM | High | Slow | Medium | High-dimensional data |
| K-Means | Medium | Fast | Medium | Pattern grouping |
| KNN | Medium | Slow (inference) | Low | Similarity checks |
| Naïve Bayes | Medium | Fast | Medium | Text classification |
| GBM | Very High | Slow | Low | Structured datasets |
| Neural Networks | Very High | Slow | Low | Deep learning tasks |

Examples of Choosing One Algorithm Over Another

- For small datasets with a clear decision boundary, Logistic Regression often works better than complex systems.

- When relationships are complicated, Random Forest or GBM is usually the safer bet.

- For text classification, Naïve Bayes often performs competitively while needing far less computing effort.

- For visual or speech problems, Neural Networks are generally the top choice.

Real-World Examples

1. Fraud Detection

Banks often combine Random Forest and GBM to detect unusual spending behaviour. These methods capture subtle changes in transactions better than traditional linear models.

2. Recommendation Systems

Platforms like streaming services or e-commerce stores mix Neural Networks and KNN to suggest relevant items. The similarity-based nature of KNN helps refine recommendations.

3. Image Classification

Neural Networks dominate large-scale image recognition tasks, although SVM can still excel on smaller datasets.

4. Customer Churn Prediction

Logistic Regression, GBM and Random Forest are widely used to identify signals that indicate a customer is about to leave.

Performance Comparison

| Algorithm | Accuracy | Memory Use | Real-Time Use | Ideal Application |
| --- | --- | --- | --- | --- |
| Linear Regression | Moderate | Low | Yes | Trend forecasting |
| Logistic Regression | Moderate | Low | Yes | Binary decisions |
| Decision Tree | Moderate | Low | Yes | Transparent decisions |
| Random Forest | High | Medium | Limited | Multi-variable tasks |
| SVM | High | Medium | Limited | High-dimensional data |
| K-Means | Medium | Low | Yes | Clustering |
| KNN | Medium | High | No | Similarity predictions |
| Naïve Bayes | Medium | Low | Yes | Text tasks |
| GBM | Very High | High | No | Data competitions |
| Neural Networks | Very High | High | Limited | Deep learning algorithms |

Common Mistakes Beginners Make

- Overfitting by training too long or tuning poorly.

- Using the wrong evaluation metrics, especially relying on accuracy with imbalanced datasets.

- Skipping preprocessing, which affects almost every algorithm negatively.

- Using overly complex models when simpler ones could perform just as well.

- Ignoring interpretability, especially in fields that require transparent decision rules.
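The evaluation-metric mistake in the list above is easy to demonstrate. In this sketch, a "model" that never flags fraud scores 95% accuracy on a made-up imbalanced dataset while catching nothing. The function name `precision_recall` and the data are assumptions for illustration.

```python
def precision_recall(labels, predictions, positive=1):
    """Return (precision, recall) for the positive class; 0.0 when undefined."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == positive and p == positive)
    fp = sum(1 for y, p in zip(labels, predictions) if y != positive and p == positive)
    fn = sum(1 for y, p in zip(labels, predictions) if y == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical imbalanced dataset: 95 legitimate transactions (0) and 5 fraudulent ones (1).
labels = [0] * 95 + [1] * 5
always_legit = [0] * 100  # a "model" that never flags fraud

accuracy = sum(y == p for y, p in zip(labels, always_legit)) / len(labels)
precision, recall = precision_recall(labels, always_legit)
print(accuracy, precision, recall)
```

Accuracy looks strong only because the negative class dominates. Recall shows that none of the fraudulent cases were found, and that is the number that matters for this task.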

Conclusion

Selecting the right machine learning algorithm has become an important decision, because core business operations now depend on accurate predictions. While neural networks lead the way for image, speech and other complex inputs, traditional machine learning algorithms still dominate healthcare, finance and business analytics because they offer clarity and dependability. The goal is to match the algorithm to the complexity of your problem, the size of your data and the amount of interpretability you require. With the right balance, these 10 methods will continue to power the most effective machine learning applications in 2026.

FAQs

Which ML algorithm is best for beginners?

Linear Regression and Logistic Regression are the easiest to start with because they’re simple and reliable.

Is deep learning also an algorithm?

Deep learning refers to a group of methods built mainly around Neural Networks.

What is the easiest ML algorithm to use?

Naïve Bayes is often the simplest because it trains quickly and works well on text.

Which algorithm works best for classification?

Random Forest, SVM and GBM typically achieve high accuracy for classification problems.

What’s the difference between supervised and unsupervised learning?

Supervised learning works with labelled data, while unsupervised learning looks for patterns without predefined labels.