The NVIDIA H200 is a high-end data-centre GPU designed for generative AI, high-performance computing (HPC) and large language models (LLMs). It is built on the Hopper architecture and is the first NVIDIA GPU to offer 141 GB of HBM3e memory, paired with 4.8 TB/s of memory bandwidth. This makes it suited to large-scale AI model inference and training as well as HPC workloads.

In this blog, we look at how the H200 compares with its predecessor, the H100: it delivers significantly higher throughput and larger memory capacity. This makes it a strong choice for massive model deployments and inference at scale.

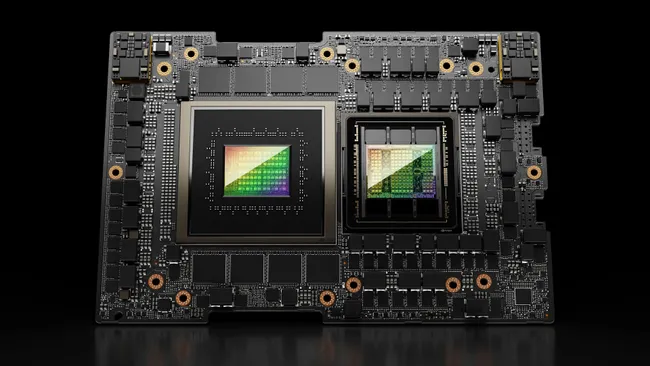

Image credit: Nvidia

Key Specifications (NVIDIA H200 Specs)

| Spec / Feature | H200 SXM / H200 NVL (data-centre GPU) |
| --- | --- |
| GPU Memory | 141 GB HBM3e |
| Memory Bandwidth | 4.8 TB/s |
| FP8 Tensor Performance | Up to ~4 petaFLOPS |
| FP16 / BFLOAT16 / FP32 Performance | Very high; optimised for AI workloads |
| Form Factors | SXM (server-grade) or NVL/PCIe versions |
| Power Consumption (TDP) | Up to 700 W (SXM) or up to 600 W (NVL/PCIe), depending on configuration |
| Interconnect / Multi-GPU | NVLink (900 GB/s) + PCIe Gen5 (128 GB/s) |

In some spec sheets, the GPU core count is given as 16,896 shading units (commonly referred to as “cores”) for the SXM/PCIe SKUs.

This hardware makes the H200 a powerhouse for inference-heavy tasks, large-scale LLM deployment, and compute-intensive HPC. In NVIDIA’s own benchmarks, the H200 delivers up to roughly 2× faster inference for large models than the previous-generation H100.
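To see why memory bandwidth matters so much for inference, here is a rough back-of-the-envelope sketch. It assumes single-stream decoding is purely bandwidth-bound, meaning every weight is read from memory once per generated token; real systems batch requests and also read a KV cache, so treat the result as a ceiling rather than a benchmark. The 70-billion-parameter FP8 model and the 3.35 TB/s H100 SXM bandwidth are assumptions brought in for illustration and do not come from the spec table above.

```python
def decode_tokens_per_second(bandwidth_tb_s: float,
                             params_billion: float,
                             bytes_per_param: float) -> float:
    """Upper bound on single-stream decode speed.

    Assumes every model weight is read from GPU memory exactly once
    per generated token and that nothing else limits throughput.
    """
    bandwidth_bytes_per_s = bandwidth_tb_s * 1e12
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_bytes_per_s / weight_bytes


H200_BANDWIDTH_TB_S = 4.8   # from the spec table
H100_BANDWIDTH_TB_S = 3.35  # assumption: H100 SXM figure, not stated in this article

# Hypothetical workload: a 70B-parameter model stored in FP8 (1 byte per weight).
h200 = decode_tokens_per_second(H200_BANDWIDTH_TB_S, 70, 1.0)
h100 = decode_tokens_per_second(H100_BANDWIDTH_TB_S, 70, 1.0)

print(f"H200 ceiling: {h200:.1f} tokens/s")
print(f"H100 ceiling: {h100:.1f} tokens/s")
print(f"Bandwidth-only speedup: {h200 / h100:.2f}x")
```

Under these assumptions the H200 tops out at about 69 tokens per second per stream versus about 48 for the H100, a ratio of roughly 1.4×. Bandwidth alone therefore does not explain a 2× result; the larger memory capacity, which allows bigger batches, may account for part of the rest.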

Release Timeline & Market Availability (H200 Release Date)

- According to NVIDIA, the H200 was made available to global system integrators and cloud-service providers beginning in Q2 2024.

- It has since been adopted by major cloud providers and data centres for both AI training/inference and HPC workloads.

Pricing Landscape (What is the NVIDIA H200 Chip Price?)

- Direct public price lists for H200 are not widely published; however, earlier-generation GPU chips (e.g., H100) were priced in the US$25,000–40,000 range for data-centre configurations, according to some market estimates.

- Given H200’s higher performance and enterprise-grade positioning, it likely commands a premium over predecessors, especially when deployed in multi-GPU server configurations.

- For most users, H200 will primarily be available through cloud-GPU instances or enterprise data-centre agreements rather than direct retail purchase.

Thus, an exact retail price is difficult to pin down. What is clear is that the H200 is firmly positioned as a high-end, enterprise-class AI GPU.

Latest Policy & Geopolitical Update: Export to China Approved

A major recent development: as of December 2025, the U.S. government under Donald Trump has approved exports of the H200 chip to “approved customers” in China — reversing previous restrictions.

Key details of the decision:

- Exports are allowed subject to review by the U.S. Department of Commerce.

- Sales to China are subject to a 25% fee/tariff assessed by the U.S. government.

- More advanced chips (such as NVIDIA’s Blackwell-generation GPUs) remain excluded from the export allowance.

This decision reopens a major market (China) for H200, which could influence global supply, pricing, and deployment strategies — especially for cloud providers and enterprises working across regions.

Why H200 Matters: Use Cases & Significance

Large-Scale AI / LLM Inference & Training

Thanks to its 141 GB HBM3e memory and high throughput, H200 excels at running large language models or generative AI workloads. For example, it can handle massive LLMs that require large memory and compute — ideal for enterprises working on generative AI, AI-as-a-service, or high-performance inference systems.
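As a rough illustration of what 141 GB means in practice, the sketch below estimates the memory taken by model weights alone at two precisions and checks whether they fit on a single H200. The 20% headroom reserved for activations, KV cache and runtime overhead is a hypothetical figure chosen for the example, as are the model sizes; neither comes from NVIDIA.

```python
H200_MEMORY_GB = 141  # from the spec table

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "fp8": 1.0}


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Memory for the weights only, in GB (1 GB taken as 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits_on_one_h200(params_billion: float, precision: str,
                     headroom_fraction: float = 0.2) -> bool:
    """True if the weights fit after reserving headroom.

    headroom_fraction is a hypothetical allowance for activations,
    KV cache and framework overhead, not a measured value.
    """
    usable_gb = H200_MEMORY_GB * (1 - headroom_fraction)
    return weight_footprint_gb(params_billion, precision) <= usable_gb


for size in (13, 70):  # hypothetical model sizes, in billions of parameters
    for precision in BYTES_PER_PARAM:
        gb = weight_footprint_gb(size, precision)
        verdict = "fits" if fits_on_one_h200(size, precision) else "does not fit"
        print(f"{size}B @ {precision}: {gb:.0f} GB of weights, {verdict}")
```

With these numbers, a 70B-parameter model fits on one H200 in FP8 but not in FP16 once headroom is reserved, which is why precision choice and memory capacity are usually considered together.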

High-Performance Computing (HPC) & Scientific Workloads

Beyond AI, H200’s massive memory bandwidth and compute power make it suitable for scientific simulations, data analytics at scale, physics modelling, and other HPC tasks where both memory throughput and parallel compute matter.

Cloud & Enterprise Deployments at Scale

Because of its performance and memory capacity, H200 is well-suited for deployment in multi-GPU servers, cloud-based AI instances, or data-center clusters. Large companies and cloud providers benefit from its high throughput and memory to support heavy workloads with fewer GPUs.
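The "fewer GPUs" point can be made concrete with a small capacity calculation. This is only a sketch: it counts GPUs by memory capacity, ignores compute and interconnect limits, and assumes 80% of each GPU's memory is usable for the model. The 350 GB model size and the 80 GB comparison GPU (the capacity of an H100 SXM) are assumptions for illustration and are not figures from this article.

```python
import math


def gpus_needed(model_gb: float, gpu_memory_gb: float,
                usable_fraction: float = 0.8) -> int:
    """Minimum GPU count so the model fits in aggregate usable memory.

    usable_fraction is a hypothetical allowance; real deployments also
    depend on parallelism strategy, batch size and KV-cache size.
    """
    usable_per_gpu = gpu_memory_gb * usable_fraction
    return math.ceil(model_gb / usable_per_gpu)


MODEL_GB = 350  # hypothetical: e.g. 175B parameters at 2 bytes each

h200_count = gpus_needed(MODEL_GB, 141)  # 141 GB per H200, from the spec table
other_count = gpus_needed(MODEL_GB, 80)  # assumption: an 80 GB GPU for comparison

print(f"141 GB GPUs needed: {h200_count}")
print(f" 80 GB GPUs needed: {other_count}")
```

Under these assumptions the same model needs four 141 GB GPUs instead of six 80 GB ones, before any performance considerations are taken into account.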

Considerations & Trade-offs

- Power Consumption & Cooling: With a TDP of up to 700 W for SXM variants, H200 deployments require robust power delivery and cooling infrastructure, which adds to both cost and facility requirements.

- Cost and Access: Given its enterprise positioning and likely high price, H200 may be accessible primarily to organisations, cloud service providers, or research institutions rather than individual buyers.

- Geopolitical/Export Restrictions Impact: Availability for certain regions (or export to China) may be subject to government regulations and export-control policies, as recent developments show. This can affect supply, pricing, and the timeframe for adoption.
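To put the power bullet above into numbers, the sketch below estimates GPU power draw and daily energy use for one server. The eight-GPU server size and the PUE (power usage effectiveness, a multiplier for cooling and facility overhead) of 1.3 are hypothetical values chosen for the example; only the 700 W TDP comes from the spec table. It also assumes every GPU runs at full TDP around the clock, which overstates typical usage.

```python
SXM_TDP_WATTS = 700  # upper bound for SXM variants, from the spec table


def gpu_power_kw(gpu_count: int, tdp_watts: float = SXM_TDP_WATTS) -> float:
    """Peak power drawn by the GPUs alone, in kilowatts."""
    return gpu_count * tdp_watts / 1000


def daily_energy_kwh(power_kw: float, pue: float = 1.0) -> float:
    """Energy over 24 hours at constant load, scaled by facility overhead.

    pue is a hypothetical facility multiplier (1.0 means no overhead).
    """
    return power_kw * 24 * pue


server_kw = gpu_power_kw(8)  # hypothetical 8-GPU server
server_kwh = daily_energy_kwh(server_kw, pue=1.3)

print(f"GPU power per server: {server_kw:.1f} kW")
print(f"Energy per day incl. overhead: {server_kwh:.1f} kWh")
```

Even before CPUs, networking and storage are counted, the GPUs in such a server draw 5.6 kW at peak, which is why rack power and cooling capacity need to be planned alongside the hardware purchase.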

What the Recent Export Approval Means for the Industry

- Resumed Access for Chinese Cloud / AI Firms: Chinese enterprises and cloud providers may again obtain H200 GPUs, potentially boosting AI and HPC capabilities in China — though under strict oversight and with an extra 25% export fee.

- Global Market Impact: Opening China again as a significant market may increase overall demand for H200, potentially affecting global supply, lead times, and enterprise procurement strategies.

- Strategic Positioning vs New Chips: While H200 is now authorised for export, more advanced chips from NVIDIA (e.g., Blackwell) remain restricted — meaning H200 may become the de facto “global flagship” for now.

Conclusion

The NVIDIA H200 is one of the most powerful GPUs currently available for AI and HPC. It offers massive memory and is well suited to large-scale inference, training and scientific workloads. Released for global deployment in mid-2024, it is now gaining further significance amid evolving geopolitical and export-control developments.

For businesses, AI-heavy organisations, and cloud providers, the H200 offers a strong foundation for generative AI, HPC and large-model inference. But deploying such hardware must factor in power, supply chain, cost, and regulatory considerations.

With the recent approval for exports to China, H200’s global footprint may expand further, potentially reshaping how high-end AI infrastructure is distributed worldwide.

FAQs

When was the NVIDIA H200 released?

The H200 became available globally (via system manufacturers and cloud providers) starting in Q2 2024.

What are the key specs (memory, power, cores) of H200?

H200 features 141 GB of HBM3e memory, 4.8 TB/s of memory bandwidth, up to ~4 petaFLOPS of FP8 Tensor compute, and power consumption of up to 700 W (SXM) or 600 W (PCIe/NVL) depending on the variant. Some spec sheets list the core count as 16,896 shading units.

Is H200 mainly for AI/machine learning, or also for HPC/scientific workloads?

Both. While designed with generative AI and LLM inference/training in mind, H200’s high memory bandwidth and compute power make it very effective for scientific computing, simulations, data analytics, and other HPC tasks.

How much does an H200 chip cost?

There’s no widely published retail price. Previous-generation GPUs (e.g., H100) sold in the US$25,000–40,000 range for data-centre configurations; H200 is likely similarly expensive or more, given its higher performance, but the exact price depends on configuration and procurement route (cloud vs on-premises).

Can companies in China buy H200 GPUs now?

Yes — as of December 2025, the U.S. government has permitted exports of H200 chips to approved Chinese customers, under oversight from the U.S. Department of Commerce and subject to a 25% export fee.