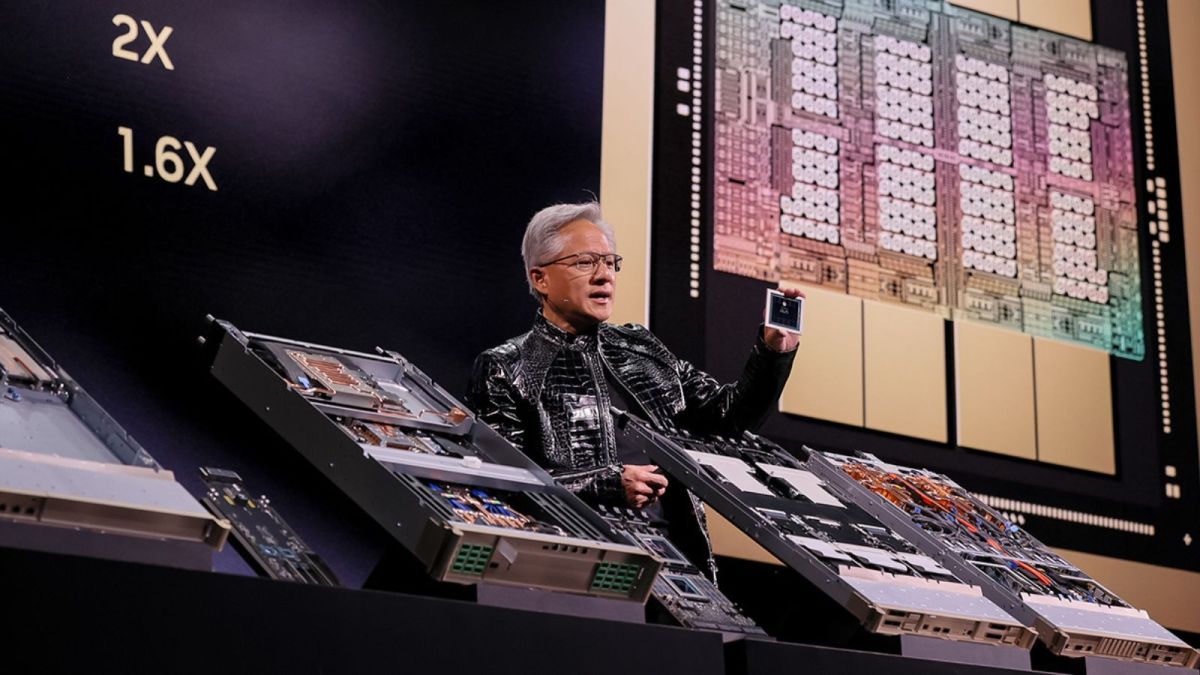

At CES 2026 in Las Vegas, Nvidia unveiled Rubin, its next-generation AI supercomputing platform designed to push artificial intelligence infrastructure into a new era of performance, efficiency, and scale. Named after American astronomer Vera Rubin, whose work reshaped our understanding of dark matter, the platform represents a foundational upgrade over Nvidia’s current flagship architecture — Blackwell.

Rubin is not a single chip. It is a tightly codesigned AI computing system made up of six integrated components that work together as a unified rack-scale architecture for training and deploying the most demanding AI workloads.

This article explores what Rubin is, why it matters, how it works, its real-world implications, where and when it will be used, and what the future might hold.

Image: Nvidia

Why Rubin Matters: New Frontiers in AI Computing

Artificial intelligence workloads have rapidly outgrown traditional hardware models, particularly as organizations pursue large-scale reasoning models, agentic AI systems, and multi-step inference tasks. Older generations of AI infrastructure emphasized raw training power, but future models require tighter integration, greater bandwidth, and more efficient scaling across compute, memory, and networking layers.

Rubin addresses these demands in multiple ways:

- Lower Inference Costs: Nvidia claims Rubin can reduce AI inference token costs by up to 10x compared to Blackwell, lowering operating expense for large-scale models.

- Training Efficiency: Nvidia says the platform can train advanced models with up to 4x fewer GPUs (roughly one-quarter as many), reducing the infrastructure footprint required for MoE (Mixture-of-Experts) systems.

- Higher Per-GPU Performance: Nvidia says Rubin GPUs deliver up to five times the NVFP4 inference performance of Blackwell GPUs, with a smaller gain (about 3.5x) for training.

These improvements aren’t incremental upgrades — they are foundational shifts in how future AI systems will be designed, deployed, and scaled.

What Makes Rubin Different? Extreme Codesign at Scale

Unlike traditional chip architectures where CPUs, GPUs, networking, and storage are loosely connected, Rubin is built through “extreme codesign” — a process that integrates every component from silicon to networking with shared design goals.

The six core components of the Rubin platform include:

- Rubin GPUs: Accelerators delivering up to 50 petaflops of NVFP4 compute for AI inference.

- Vera CPUs: Custom Arm-based processors optimized for data movement and agentic reasoning workflows.

- NVLink 6 Switch: Next-generation high-bandwidth interconnect for GPU-to-GPU communication across the rack.

- ConnectX-9 SuperNICs: Network interface controllers that dramatically improve data center throughput.

- BlueField-4 DPU: Data processing units that offload networking and security tasks from host CPUs.

- Spectrum-6 Ethernet Switch: The switch behind Nvidia’s Spectrum-X Ethernet networking fabric, engineered for low latency and high throughput in rack-scale systems.

By designing these six layers together, Nvidia aims to eliminate traditional bottlenecks and ensure that the platform performs not as a collection of components, but as a cohesive supercomputing system.

Rubin vs Blackwell: What’s New and Better

Rubin represents the next evolutionary step beyond Nvidia’s Blackwell architecture, which has dominated recent AI infrastructure. The key differences include:

- Performance: Each Rubin GPU delivers up to 50 petaflops of NVFP4 compute, well above what Blackwell GPUs offer.

- Memory Bandwidth: Advanced HBM4 memory and next-gen NVLink communications overcome bottlenecks that limited prior architectures, particularly in long-context and reasoning workloads.

- Efficiency: Nvidia expects Rubin’s codesigned, system-wide approach to cut inference cost per token by up to 10x, which would put high-performance AI compute within reach of a broader set of organizations.

- Scalability: The platform is built for large “AI factories” and supercomputing deployments. Systems such as the NVIDIA DGX Rubin NVL8 deliver hundreds of petaflops of inference performance and terabytes of high-bandwidth memory, and rack-scale configurations extend this further.

These architectural advances signal a shift in focus: from pure raw floating-point performance to system-level intelligence that addresses both training and real-world AI deployment workloads.
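As a rough check on the "hundreds of petaflops" figure, the per-GPU number can simply be multiplied by the GPU count. The sketch below is a back-of-the-envelope upper bound, not a benchmark: it uses the "up to 50 petaflops" NVFP4 figure quoted in this article and assumes the GPU counts implied by the product names (8 for NVL8, 72 for NVL72). The function name is illustrative.

```python
PFLOPS_PER_GPU = 50  # "up to" NVFP4 figure quoted in this article


def peak_pflops(gpu_count, per_gpu=PFLOPS_PER_GPU):
    """Upper-bound aggregate: per-GPU peak times GPU count, ignoring all overheads."""
    return gpu_count * per_gpu


# GPU counts are assumed from the product names (NVL8 -> 8, NVL72 -> 72).
for name, gpus in [("DGX Rubin NVL8", 8), ("NVL72", 72)]:
    print(f"{name}: up to {peak_pflops(gpus)} petaflops NVFP4")
```

Real workloads will land below these peaks, since the calculation ignores memory, networking, and utilization limits.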

How Rubin Enables Future AI Models

Rubin isn’t just a faster chip — it’s optimized for the next generation of AI systems, including:

Large-Context Reasoning Models

Some emerging AI models require context windows that span millions of tokens — whether for long-form text generation, comprehensive coding tasks, or extended dialogues. Rubin’s increased memory bandwidth and storage integration help systems maintain these huge contexts efficiently.
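To see why very long contexts stress memory, it helps to size the attention key/value cache that a model keeps for each sequence. The following is a minimal sketch using the standard estimate for a decoder-only transformer. The model dimensions are hypothetical placeholders chosen for illustration, not the specifications of any real model or of Rubin.

```python
def kv_cache_bytes(tokens, layers, kv_heads, head_dim, bytes_per_value):
    """Approximate KV-cache size for one sequence in a decoder-only transformer.

    Each token stores one key vector and one value vector
    per layer per KV head, hence the factor of 2.
    """
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_value
    return tokens * per_token


# Hypothetical model shape, chosen only for illustration.
size = kv_cache_bytes(
    tokens=1_000_000,
    layers=64,
    kv_heads=8,
    head_dim=128,
    bytes_per_value=2,  # 16-bit values
)
print(f"{size / 1e9:.1f} GB for a single million-token sequence")
```

Under these assumptions a single million-token sequence needs roughly 262 GB of cache, which is why memory capacity and bandwidth, not just raw compute, limit long-context serving.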

Agentic AI Systems

“Agentic AI” refers to AI that can plan, act, and adapt across multi-step workflows. Such systems demand sustained reasoning, heavy data movement, and the ability to orchestrate workflows across many GPUs. Rubin’s architecture is built to handle these workloads more efficiently than older, siloed designs.

Mixture-of-Experts (MoE) Models

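MoE models route each input token dynamically to a small subset of specialized “expert” submodels. This keeps per-token compute low relative to total model size, but it increases memory footprint and cross-GPU communication. Rubin’s integrated design is intended to reduce the hardware required to train and run these models.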
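The routing step in an MoE layer is commonly implemented as top-k gating: a small gating network scores every expert for each token, and only the k best-scoring experts run. The sketch below illustrates that general technique in plain Python. It is not Nvidia's implementation, and the gate scores are made-up values.

```python
import math


def route_token(gate_logits, k=2):
    """Pick the top-k experts for one token and normalize their weights.

    gate_logits: one score per expert, as produced by a learned gating layer.
    Returns (expert_index, weight) pairs whose weights sum to 1.
    """
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected experts only (shifted by the max for stability).
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


# Hypothetical gate scores for a layer with 8 experts.
logits = [0.1, 2.0, -1.0, 0.5, 1.0, 0.0, -0.3, 0.8]
for expert, weight in route_token(logits, k=2):
    print(f"expert {expert}: weight {weight:.3f}")
```

Because different tokens in a batch select different experts, and experts are typically spread across GPUs, this routing generates the all-to-all traffic that makes interconnect bandwidth matter for MoE workloads.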

Rubin in Real-World Context: Who Will Use It and Why

While the Rubin platform is marketed primarily at hyperscale data centers and enterprise AI operations, its implications extend far beyond those environments.

Hyperscalers and Cloud Providers

Industry giants like Microsoft, AWS, Google, and Meta are already lining up to adopt Rubin-based systems for their cloud AI offerings, enabling customers to access premium performance via cloud Infrastructure-as-a-Service (IaaS).

Enterprises Building AI Services

Large enterprises building AI-powered products — from financial forecasting tools to recommendation engines and autonomous systems — stand to benefit from Rubin’s reduced costs and improved scalability.

AI Model Developers

Organizations training next-generation AI models such as multimodal reasoning systems or domain-specific large models (e.g., healthcare, robotics, climate science) require flexible infrastructure that can handle both training and deployment workloads. Rubin’s integrated architecture is tailored for these workflows.

Emerging Use Cases – Robotics and Autonomy

Rubin’s architecture also supports systems that combine perception, planning, and real-time reasoning — such as autonomous vehicles and robotics platforms. Nvidia’s own autonomous driving model “Alpamayo” was announced alongside Rubin, reflecting the ecosystem approach.

Deployment Timeline and Ecosystem Partners

Nvidia plans to begin rolling out Rubin-based systems through partners in the second half of 2026, including rack-scale deployments like the NVL72 and DGX Rubin systems.

Ecosystem support has been a priority, with companies such as CoreWeave providing specialized managed services for Rubin-powered systems, and Red Hat collaborating on software stack optimizations including RHEL and OpenShift.

Addressing the Cost and Efficiency Challenge

One of the biggest bottlenecks in AI operations today is the cost per inference token: the expense of running a model to process or generate a single token. As generative AI workloads scale, token costs consume a growing share of operational budgets. Rubin’s design tackles this directly:

- Up to 10x lower inference token costs compared to Blackwell-based systems.

- Up to 4x fewer GPUs needed for training complex models.

- Superior power efficiency and throughput across rack-scale deployments.

These improvements can materially reduce total cost of ownership (TCO) for AI infrastructure, enabling startups and enterprises to deploy AI at scales previously uneconomical.
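To make the ratios above concrete, the sketch below applies them to a made-up baseline. The dollar figures, token volume, and cluster size are hypothetical and are not real pricing. The 10x and 4x factors are Nvidia's best-case ("up to") claims, so actual savings may be smaller.

```python
import math


def apply_claimed_ratios(cost_per_million_tokens, training_gpus,
                         token_cost_factor=10, gpu_factor=4):
    """Scale a baseline by the best-case ("up to") ratios quoted above."""
    new_cost = cost_per_million_tokens / token_cost_factor
    new_gpus = math.ceil(training_gpus / gpu_factor)
    return new_cost, new_gpus


# Hypothetical baseline, not real pricing.
baseline_cost = 2.00        # dollars per million tokens
baseline_gpus = 1024        # GPUs in a training cluster
monthly_tokens_m = 50_000   # millions of tokens served per month

new_cost, new_gpus = apply_claimed_ratios(baseline_cost, baseline_gpus)
print(f"Inference bill: ${baseline_cost * monthly_tokens_m:,.0f} "
      f"-> ${new_cost * monthly_tokens_m:,.0f} per month")
print(f"Training GPUs: {baseline_gpus} -> {new_gpus}")
```

A full TCO estimate would also account for hardware purchase price, power, cooling, and utilization, none of which this arithmetic captures.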

Challenges and Industry Implications

Despite its promise, Rubin is not without challenges:

1. Software Ecosystem Requirements

Highly integrated hardware demands equally capable software stacks. Organizations may need to adapt or rebuild tools to fully exploit Rubin’s capabilities.

2. Energy Consumption

Large rack-scale systems consume significant power, requiring advanced cooling and power infrastructure, particularly in large data centers.

3. Competitive Landscape

Competitors like AMD and custom ASIC solutions from cloud providers (e.g., Google TPUs) remain relevant. While Rubin represents a leap forward, the long-term competitive balance will depend on performance, ease of adoption, and total cost benefits.

Future Outlook: Beyond 2026

Rubin is more than the next chip generation — it’s Nvidia’s architectural vision for AI infrastructure through the rest of this decade. With continued advances in AI reasoning, agentic systems, and generative workflows, supercomputing platforms like Rubin will increasingly underpin both research and production AI systems across industries.

As demand for AI compute scales toward zettaflops and beyond, Rubin and its successors may define the next wave of innovation in cloud infrastructure, autonomous systems, and real-time AI services. The platform’s introduction marks a critical inflection point where performance, cost efficiency, and system-level integration become central to AI adoption at scale.

FAQs

What exactly is Nvidia’s Rubin platform?

Rubin is Nvidia’s next-generation AI supercomputing platform. It consists of six codesigned components (Rubin GPU, Vera CPU, NVLink interconnect, ConnectX-9 SuperNIC, BlueField-4 DPU, and Ethernet switch) designed for large-scale training and inference.

How does Rubin differ from Nvidia Blackwell?

Compared to Blackwell, Rubin offers higher performance, tighter integration through extreme codesign, up to 10x lower inference costs, and more efficient scaling for large AI models.

When will Rubin systems be available?

Rubin-based systems, including NVL72 racks and DGX Rubin supercomputers, are expected to ship through partners in the second half of 2026.

Who will use Rubin?

Hyperscalers, cloud providers, enterprises building AI services, and research institutions that require scalable, cost-efficient AI compute will be the primary users.

Does Rubin reduce AI infrastructure costs?

Yes — Nvidia claims up to 10x lower inference token costs and 4x fewer GPUs needed for complex model training, reducing total compute costs.

What kinds of workloads benefit most from Rubin?

Large language models with long context, agentic AI systems, mixture-of-experts models, and data-heavy reasoning tasks benefit significantly from Rubin’s architecture.

Is Rubin only hardware?

No — Rubin’s value comes from integrated hardware and system-level software orchestration optimized for AI workloads.

Will Rubin be used outside data centers?

Rubin is designed primarily for rack-scale and cloud environments, but its components and derived architectures could influence edge and specialized AI infrastructure.

How does Rubin support model deployment?

By reducing compute costs and improving performance at scale, Rubin enables faster deployment cycles and cost-effective inference for large AI services.

What’s next after Rubin?

Architectural successors like Rubin Ultra and other performance-optimized systems are expected as AI workloads continue to grow in complexity.