Artificial intelligence is rapidly shifting toward local processing, a move driven by privacy concerns, faster inference, and independence from cloud-based APIs. In this wave of decentralized AI, two platforms have captured the community’s imagination: Ollama and LM Studio. Both tools make it easy to run large language models directly on your own machine, but they take different routes. Ollama is minimal, developer-focused, and command-line driven, while LM Studio is sleek, beginner-friendly, and GUI-based. If you’re wondering which one to pick, this detailed breakdown of Ollama vs LM Studio will give you a clear, experience-backed answer.

Quick Comparison: Key Differences Between Ollama and LM Studio

| Feature | Ollama | LM Studio |
| --- | --- | --- |
| User Interface | Command-line interface (CLI) | Graphical user interface (GUI) |
| Ease of Use | Ideal for developers | Ideal for beginners |
| Setup & Installation | Requires terminal commands | Plug-and-play desktop installation |
| Customization | High: Modelfile supports advanced configuration | Moderate: focused on chat and local model loading |
| Model Access | Uses its own registry (ollama pull) | Connects directly with Hugging Face |
| Performance | Faster and more resource-efficient | Slightly heavier but smoother visually |
| Integration | Easily embedded in apps & pipelines | Limited to local GUI usage or APIs |
| Open Source | Fully open-source and community-driven | Proprietary but free |
| Platform Support | macOS, Linux, Windows (preview) | macOS, Windows, Linux (beta) |
| Best For | Developers, researchers, AI engineers | Educators, students, creators, testers |

Understanding Ollama: Command-Line Precision and Speed

Ollama is a local LLM runner that has become immensely popular among developers who love precision, flexibility, and speed. It runs on a command-line interface (CLI), making it lightweight and scriptable.

Unlike typical AI chat tools that rely on cloud connectivity, Ollama lets you run advanced models like Llama 2, Mistral, or Code Llama directly on your device. It’s fully open-source and built on the llama.cpp backend, which keeps inference efficient even on standard hardware.

What truly differentiates Ollama is the Modelfile, a configuration file that defines how the model behaves. Developers can create variations of a base model, assign system prompts, or adjust sampling parameters such as temperature. Think of it as the “Dockerfile” of local LLMs.
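To make the Dockerfile comparison concrete, here is a minimal sketch in Python that renders the text of a Modelfile. The helper name `render_modelfile`, the system prompt, and the parameter values are illustrative assumptions, not taken from the article. The `FROM`, `PARAMETER`, and `SYSTEM` instructions are part of the Modelfile format.

```python
def render_modelfile(base_model: str, system_prompt: str, parameters: dict) -> str:
    """Return Modelfile text that derives a custom model from base_model.

    Hypothetical helper for illustration; Ollama itself only needs the text.
    """
    lines = [f"FROM {base_model}"]
    # Each PARAMETER line sets one runtime option, e.g. temperature or num_ctx.
    for name, value in parameters.items():
        lines.append(f"PARAMETER {name} {value}")
    # The SYSTEM instruction sets the system prompt baked into the new model.
    lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"


# Example values are assumptions chosen for illustration only.
modelfile_text = render_modelfile(
    base_model="llama2",
    system_prompt="You are a concise assistant for code review.",
    parameters={"temperature": 0.2, "num_ctx": 4096},
)
print(modelfile_text)
```

Saving the printed text to a file named Modelfile and running ollama create code-reviewer -f Modelfile should register a new local model (the name code-reviewer is a placeholder), which can then be started with ollama run code-reviewer.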

Key strengths of Ollama include:

- Local-first architecture: Keeps all data on your machine, enhancing privacy.

- Quick commands: Models can be pulled and run instantly using CLI.

- Flexible integration: Suitable for embedding into custom apps or backend workflows.

- Optimized execution: Low memory footprint and fast response time.

- Community-driven updates: Its open-source nature invites transparency and innovation.

However, for non-developers, the lack of a GUI can feel like a hurdle. Ollama’s strength lies in control and performance, not hand-holding, and that’s exactly why developers love it.

Exploring LM Studio: Local AI Made Visually Simple

LM Studio, on the other hand, focuses on accessibility and user experience. It is a desktop application designed to make running large language models locally feel smooth.

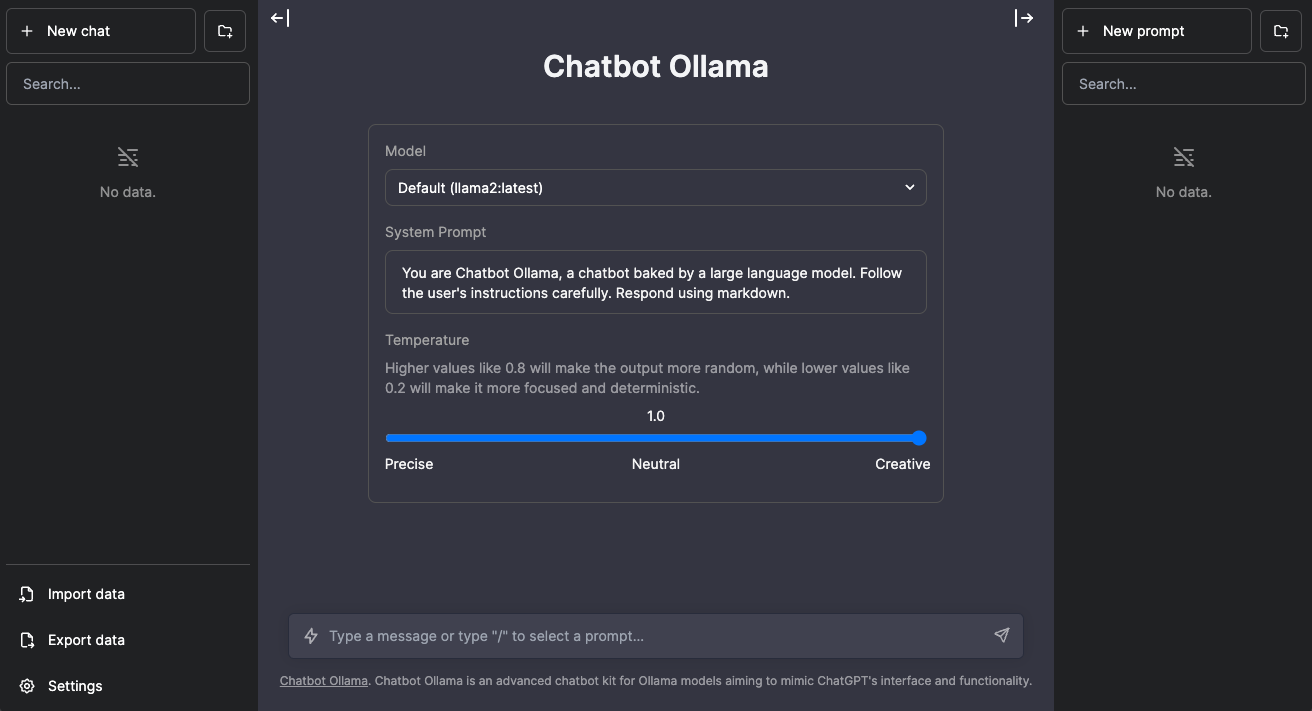

Its graphical user interface resembles that of ChatGPT: a clean chat window where you can load models, experiment with different prompts, and analyse responses in real time. This removes the need for command-line operations, which suits users who just want to experiment without any technical setup.

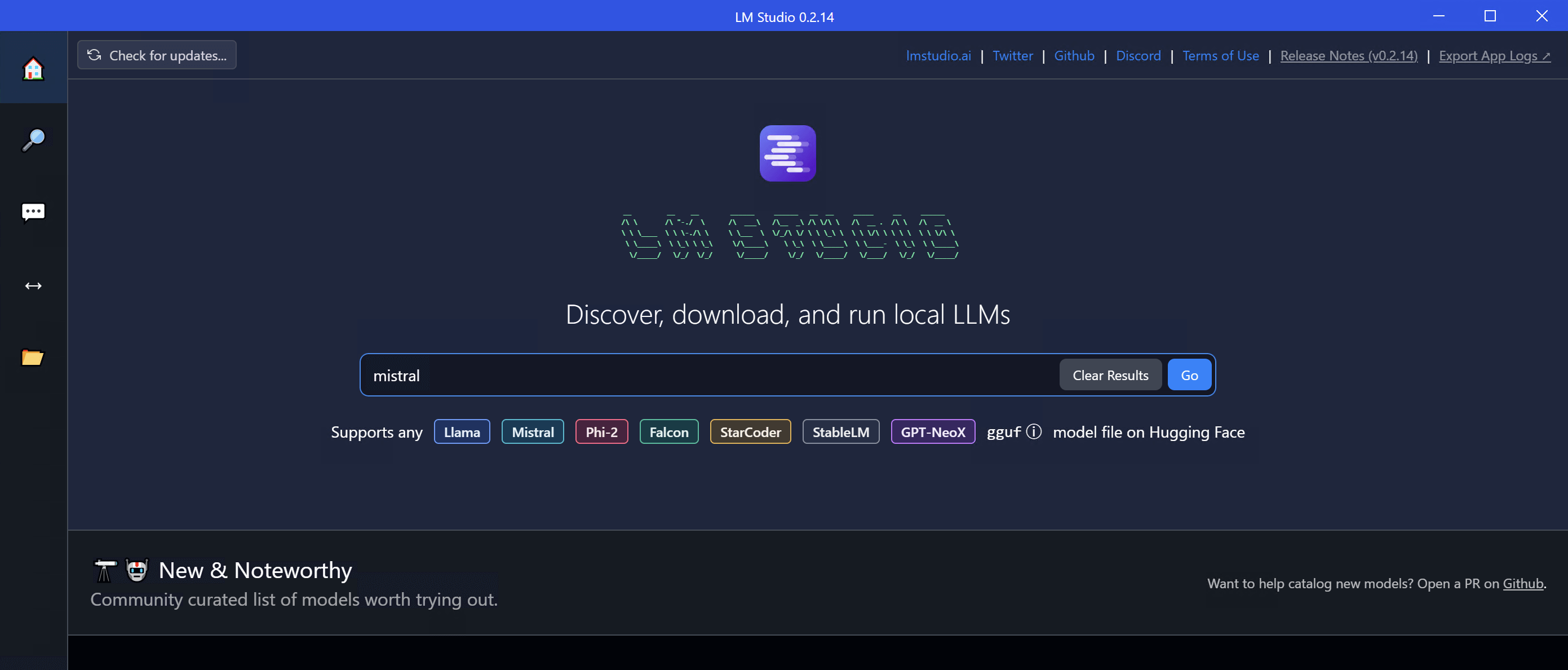

LM Studio connects seamlessly with Hugging Face, allowing you to browse and download models like Mistral, LLaMA, or Vicuna directly inside the app. It also runs an OpenAI-compatible local server, meaning developers can integrate it into existing applications using the same API structure as OpenAI but without sending data to the cloud.
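As a rough illustration of what "the same API structure as OpenAI" means in practice, the sketch below builds a chat completion request for LM Studio's local server using only the Python standard library. It assumes the server has been started inside LM Studio and is listening on its default port, 1234. The model identifier `local-model` and the helper names are placeholders, not names from the article.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port (1234).
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "local-model") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # placeholder; use the identifier of the model you loaded
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        LM_STUDIO_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the assistant text out of an OpenAI-style response body."""
    parsed = json.loads(response_body)
    return parsed["choices"][0]["message"]["content"]


def ask(prompt: str) -> str:
    """Send the prompt to the local server; only works while the server is running."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as response:
        return extract_reply(response.read())
```

Calling ask("Explain GGUF in one sentence.") while the server is running should return the model's reply as a string. Because the request shape matches OpenAI's, existing client code can usually be pointed at the local URL instead of the cloud endpoint.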

What makes LM Studio appealing:

- Instant setup: Download, install, and chat with a model in minutes.

- Visually engaging: A GUI that feels intuitive for non-technical users.

- Hugging Face integration: Access thousands of community-trained models.

- Offline capability: Run conversations without Internet dependency.

- API flexibility: For developers who want some integration freedom.

The main limitation is that it doesn’t offer as much control or customization at the model level as Ollama. It’s more of an interface for exploration, not a platform for engineering.

Ollama vs LM Studio: Installation & Setup Experience

Ollama

Ollama installation is straightforward for developers. A simple command like brew install ollama (on macOS) gets it running, and once it is installed, you can pull and run your first model using:

ollama pull llama2

ollama run llama2

It’s fast, minimal, and designed for repeatable use in workflows. Developers can integrate it directly with scripts, cron jobs, or full-fledged applications.
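For script or cron-job integration, one option is to talk to Ollama's local HTTP API rather than the interactive CLI. The following is a minimal sketch under stated assumptions: the Ollama service is running on its default port (11434), the llama2 model has already been pulled, and the helper names are invented for illustration.

```python
import json
import urllib.request

# Assumption: Ollama's local service is listening on its default port, 11434.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    # "stream": False asks for a single JSON object instead of a token stream.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_GENERATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response_body: bytes) -> str:
    """Return the generated text from a non-streaming response body."""
    return json.loads(response_body)["response"]


def run_batch(prompts: list[str], model: str = "llama2") -> dict[str, str]:
    """Send each prompt in turn; only works while Ollama is running locally."""
    results = {}
    for prompt in prompts:
        request = build_generate_request(prompt, model)
        with urllib.request.urlopen(request, timeout=120) as response:
            results[prompt] = extract_text(response.read())
    return results
```

A scheduled job could call run_batch with a list of prompts and write the returned dictionary to a log or database. Keeping request construction separate from sending makes the script easy to test without a running model.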

LM Studio

In contrast, LM Studio uses a download-and-install setup typical of desktop apps. No command line, no configuration files. You launch it, choose a model, and start chatting.

This difference highlights a core truth: Ollama empowers technical users, while LM Studio enables creative users.

Interface & Workflow: How You Interact Matters

This is arguably the biggest divide in the Ollama vs LM Studio debate.

Ollama’s CLI allows for automation, batch testing, and embedding. It’s not flashy, but it’s fast and extremely powerful for those who know their way around a terminal. It’s ideal for AI developers who integrate LLMs into products or APIs.

LM Studio’s GUI, by contrast, focuses on comfort. It brings a conversational experience to local AI, much like ChatGPT but entirely offline. You can save sessions, reload models, and tweak parameters visually.

So, if you’re building something, Ollama fits. If you’re exploring and learning, LM Studio feels right.

Model Compatibility & Access

Both platforms rely on similar backends (llama.cpp), but they differ in how users access and manage models.

- Ollama: Maintains its own curated model repository with optimized Llama, Mistral, and Code Llama builds. You can pull models directly using ollama pull.

- LM Studio: Offers broader discovery through Hugging Face integration, giving access to thousands of community-contributed models in GGUF format.

Verdict: LM Studio wins in diversity, but Ollama delivers cleaner optimization and faster startup times.

Performance and Optimization

When comparing Ollama vs LM Studio in performance, the results often come down to system resources and user intent.

- Ollama runs models efficiently with minimal overhead, thanks to its CLI architecture and smart memory management. It’s ideal for repeated tasks, automation, or embedded use in backend systems.

- LM Studio delivers steady, smooth performance but requires more GPU and RAM to handle its graphical interface. It’s better suited for interactive sessions rather than high-throughput inference.

Community-reported benchmarks often show Ollama loading and responding faster, especially on Linux setups, though results vary with hardware, model size, and quantization.
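Rather than relying on third-party numbers, you can measure throughput on your own hardware. Ollama's non-streaming generate response includes timing metadata such as `load_duration`, `eval_count`, and `eval_duration`, with durations reported in nanoseconds. The sketch below turns those fields into load time and tokens per second. The helper name is an assumption, and the sample values are made up for illustration, not real measurements.

```python
NANOSECONDS_PER_SECOND = 1_000_000_000


def summarize_timing(response: dict) -> dict:
    """Convert Ollama's nanosecond timing fields into readable metrics."""
    eval_seconds = response["eval_duration"] / NANOSECONDS_PER_SECOND
    return {
        # Time spent loading the model into memory before generation.
        "load_seconds": response["load_duration"] / NANOSECONDS_PER_SECOND,
        # Generated tokens divided by the time spent generating them.
        "tokens_per_second": response["eval_count"] / eval_seconds,
    }


# Made-up sample values for illustration only; not a real benchmark result.
sample_response = {
    "load_duration": 1_500_000_000,
    "eval_count": 120,
    "eval_duration": 4_000_000_000,
}
print(summarize_timing(sample_response))
```

Running the same prompt several times and averaging the figures gives a fairer comparison, since the first request usually includes model load time.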

Customization and Developer Control

If you like to tinker, Ollama gives you the tools. With a Modelfile, developers can define system prompts, set parameters such as context length and temperature, and build new model variants on top of existing ones. Combined with the local API, this lets you script how LLMs interact with external data sources.

LM Studio, meanwhile, provides a simpler configuration menu for temperature, token length, and system prompt, but lacks deeper control or scripting options.

Verdict: Ollama dominates in customization and developer flexibility.

Why Do People Like Ollama More Than LM Studio?

Despite LM Studio’s beautiful interface and beginner-friendly appeal, many developers and tech enthusiasts gravitate toward Ollama, and for good reason.

- It’s Open Source: Transparency builds trust. Developers prefer tools where they can audit the code, suggest changes, and contribute improvements.

- Performance Efficiency: Ollama is lighter, faster, and uses less memory compared to GUI-based alternatives.

- Automation & Integration: The CLI design allows seamless embedding into apps, pipelines, or automation scripts, something LM Studio doesn’t yet excel at.

- Developer-Centric Flexibility: The Modelfile feature enables deep control over how models behave, something LM Studio’s GUI cannot replicate.

- Community Backing: The open-source community around Ollama grows faster, pushing frequent updates, patches, and model optimizations.

- Cross-Platform Strength: Its Linux support gives it an edge among developers and enterprises.

- Docker-Like Familiarity: Developers who use Docker or terminal-based tools find Ollama’s syntax instantly comfortable.

Simply put, Ollama feels built by developers, for developers, while LM Studio feels built for general AI exploration.

That doesn’t mean LM Studio isn’t valuable. It’s fantastic for educators, content creators, or anyone wanting an offline ChatGPT experience. But for those chasing efficiency, automation, and open-source reliability, Ollama delivers exactly what they want.

Use-Case Based Recommendations

| Use Case | Recommended Platform |
| --- | --- |
| Beginners & Educators | LM Studio |
| Developers Building LLM Apps | Ollama |
| Offline Prompt Testing | LM Studio |
| Automation & Pipelines | Ollama |
| Integration with APIs or Scripts | Ollama |
| Non-Technical AI Exploration | LM Studio |
| Fine-Tuning Model Behavior | Ollama |
| Lightweight Setup for Laptops | Ollama |
| Visual Interaction for Learning | LM Studio |

TechNow’s Perspective: Empowering Local AI Experiences

TechNow believes that tools such as LM Studio and Ollama showcase the future of AI, in which innovation and automation go hand in hand.

TechNow specialises in helping businesses build advanced AI systems, integrate them with modern technology, and optimise their operations, leading to a more secure, faster, privacy-first environment. If you are planning to automate your workflow, create an enterprise-level AI ecosystem, or experiment with local LLMs, TechNow can be a suitable choice.

Because in the world of AI, choosing the right tool is a strategic decision. And with TechNow by your side, you’ll always stay ahead of the curve.

Final Verdict: Choosing Between Ollama and LM Studio

Choosing between Ollama and LM Studio ultimately depends on what you value most: control or convenience.

Whether you work in technical research, web development, or other engineering work, flexibility matters, and on that front Ollama is the clear winner. It offers fast performance, easy integration, and open-source tooling that fits into complex work environments. You can script it, scale it, and shape it to your needs.

For local AI exploration, people who want a clean, visual, and chat-style interface find LM Studio unbeatable in simplicity. It’s like having your own offline ChatGPT without worrying about privacy or subscriptions.

Both are impressive, but they cater to different mindsets.